Chapter 07

Alternative Cryptocurrencies and Their Differences

Not long after the inception of Bitcoin, many alternative cryptocurrencies followed. This chapter tells the story of these altcoins: how they emerged and how they differ technologically from Bitcoin. By now, thousands of them have flooded the Internet. Many did not survive more than a few months, and the vast majority ended up in the Internet’s junkyard. The open-source nature of Bitcoin, as well as of many other technologies in the crypto space, naturally attracted the attention of many people who came up with innovative modifications to the Bitcoin protocol itself, or who created brand-new protocols from scratch, intending them to be a Bitcoin 2.0. Their motivations arguably varied from case to case: from straightforward performance improvements, such as increasing transaction throughput, through architectural changes aimed at greater decentralization and censorship resistance, all the way to intricate get-rich-quick schemes that were a very lucrative business for a certain period of time.

“But Bitcoin is better,” a Bitcoin maximalist would often respond. And indeed, Bitcoin is the one and only; that is undeniably true. It has the biggest network effect, community, and ecosystem, as well as the proof-of-work (PoW) chain with the most accumulated work, and thus the most security. Nonetheless, the chronicles of cryptocurrencies have room for much more than a single set of ideals, and for revolutionary innovation on many fronts. The ecosystem has been extremely vibrant, especially since 2013. New alternative cryptocurrencies, or altcoins as they came to be known, began popping up at an increasing rate. While many made frivolous changes to their codebase and were rightfully labeled copycats, others sought technology-enhancing modifications in the spirit of innovation and breakthroughs. The goal of this chapter is to explore the beautiful blend of similarities and differences among them.

The very first “altcoin” to emerge was aptly called Namecoin. Its network was announced on the Bitcointalk forum and launched in April 2011. It is a fork of the Bitcoin reference software and uses the same hashing algorithm and monetary policy, with a supply cap of 21 million coins. Namecoin’s purpose was to decentralize certain components of the Internet infrastructure by applying blockchain to them, mainly DNS (Domain Name System) and identities. It was meant to allow, for example, decentralized TLS (HTTPS) certificate validation, an idea later picked up by engineers within the Ethereum ecosystem, which resulted in the Ethereum Name Service (ENS), as well as by other projects such as Handshake and Blockstack.

All these projects aim to create a more decentralized alternative to the current DNS, a hierarchical global structure supervised by the Internet Corporation for Assigned Names and Numbers (ICANN), a non-profit organization based in Los Angeles that allocates top-level domains like .com, .org, .net and others. But that is not all. The most important distinguishing feature of these systems is that they allow users to truly own their domains. In today’s DNS, users typically rent domains from corporations that were lucky enough to be selected to run them. In the case of .com domains, for instance, the chosen one is Verisign, which earns over a billion dollars in revenue annually for their administration. Should these projects succeed, they may redistribute power and wealth within the domain system.

A startup called Unstoppable Domains, which builds domains on different blockchains, has even launched .crypto domains. Even though they reside on Ethereum, they allow users to link addresses from many different cryptocurrencies to a single domain. For instance, if you like this book, you can tip me in Bitcoin, Litecoin, Ether, Dai, or Dash just by sending any of them to my domain: stanceldavid.crypto.
As of 2020, several wallets allow you to do this, including MyEtherWallet, MyCrypto, Coinomi, Atomic, Burner Wallet, and many others. Moreover, Unstoppable Domains also lets you publish your own website, linked to the same domain and hosted on the InterPlanetary File System (IPFS). By now, such websites should be accessible through the Opera or Brave browsers. This creates a powerful combination of uncensorable websites and uncensorable payments that may prove handy in the future.

The idea of an alternative to DNS had already been discussed on the Bitcointalk forum in mid-2010, in a thread about a hypothetical system called BitDNS. What is interesting about this idea, among other things, is that it received support from Satoshi Nakamoto himself, as well as from other Bitcoin Core developers such as Gavin Andresen and Jeff Garzik. In a discussion in December 2010, Satoshi elaborated on technical options for implementing such a system:

I think it would be possible for BitDNS to be a completely separate network and separate blockchain, yet share CPU power with Bitcoin. The only overlap is to make it so miners can search for proof-of-work for both networks simultaneously. The networks wouldn't need any coordination. Miners would subscribe to both networks in parallel. They would scan SHA such that if they get a hit, they potentially solve both at once. A solution may be for just one of the networks if one network has a lower difficulty… Instead of fragmentation, networks share and augment each other's total CPU power. This would solve the problem that if there are multiple networks, they are a danger to each other if the available CPU power gangs up on one. Instead, all networks in the world would share combined CPU power, increasing the total strength. It would make it easier for small networks to get started by tapping into a ready base of miners.

What Satoshi described here eventually became known as “merge mining”. It was later implemented by Namecoin and, a few years after that, by Peercoin, RSK, and others. It is a clever way to secure smaller networks, as it incentivizes miners to submit their proofs of work to multiple networks and potentially get rewarded more than once for the same work (and the same electricity costs). As he clarified later in the same thread:

The incentive is to get the rewards from the extra side chains also for the same work. While you are generating bitcoins, why not also get free domain names for the same work? If you currently generate 50 BTC per week, now you could get 50 BTC and some domain names too. You have one piece of work. If you solve it, it will solve a block from both Bitcoin and BitDNS…
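Satoshi’s idea can be illustrated with a minimal Python sketch: each nonce produces a single double-SHA-256 hash, which is then checked against the targets of both networks. It assumes the header already commits to the auxiliary chain’s block; in practice, merge mining as implemented by Namecoin (“AuxPoW”) does this by placing the auxiliary block hash in the parent chain’s coinbase transaction, a structure omitted here. The header bytes and targets are hypothetical.

```python
import hashlib

def double_sha256(data: bytes) -> bytes:
    """Bitcoin-style proof-of-work hash: SHA-256 applied twice."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()

def meets_target(block_hash: bytes, target: int) -> bool:
    """Bitcoin reads the hash as a little-endian 256-bit integer and compares it to the target."""
    return int.from_bytes(block_hash, "little") <= target

def merge_mine(header_prefix: bytes, parent_target: int, aux_target: int, max_nonce: int = 1_000_000):
    """Scan nonces once; every hash is checked against both networks' targets.

    Yields (nonce, solves_parent, solves_aux) for each hash that solves at least one chain.
    """
    for nonce in range(max_nonce):
        h = double_sha256(header_prefix + nonce.to_bytes(4, "little"))
        parent_ok = meets_target(h, parent_target)
        aux_ok = meets_target(h, aux_target)
        if parent_ok or aux_ok:
            yield nonce, parent_ok, aux_ok

# Hypothetical targets: the parent chain is 256 times harder than the auxiliary chain.
PARENT_TARGET = 2**256 >> 12   # roughly 1 hash in 4,096 succeeds
AUX_TARGET = 2**256 >> 4       # roughly 1 hash in 16 succeeds

hits = list(merge_mine(b"hypothetical header", PARENT_TARGET, AUX_TARGET, max_nonce=5_000))
print("aux-chain solutions:", sum(aux for _, _, aux in hits))
print("parent-chain solutions:", sum(parent for _, parent, _ in hits))
```

Because the parent target is lower, every hash that solves the parent chain also solves the auxiliary one, which is the “free domain names” effect Satoshi describes; the reverse does not hold, matching his remark that a solution may be valid for just one of the networks.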

Interestingly enough, Namecoin is one of the few coins from that era that are still around. It is still actively developed and has a market capitalization of about $10M, which ranks it somewhere between 200th and 300th on CoinMarketCap (CMC). A few months after Namecoin, in October 2011, another “evergreen” was announced and launched: Litecoin. Litecoin was created as the “silver to Bitcoin’s gold”, and as a reaction to a number of earlier cryptocurrencies that had a similar goal but suffered from problems of their own. Charlie Lee, the creator of Litecoin and a former Google and Coinbase employee, described these problems in his Bitcointalk announcement: a premine (Tenebrix), low hash rate (GeistGeld), and signs of a pump-and-dump scheme (Ixcoin). Some coins reportedly had problematic launches because of bad configuration, and some even seemed to play shady games by launching without releasing their source code.

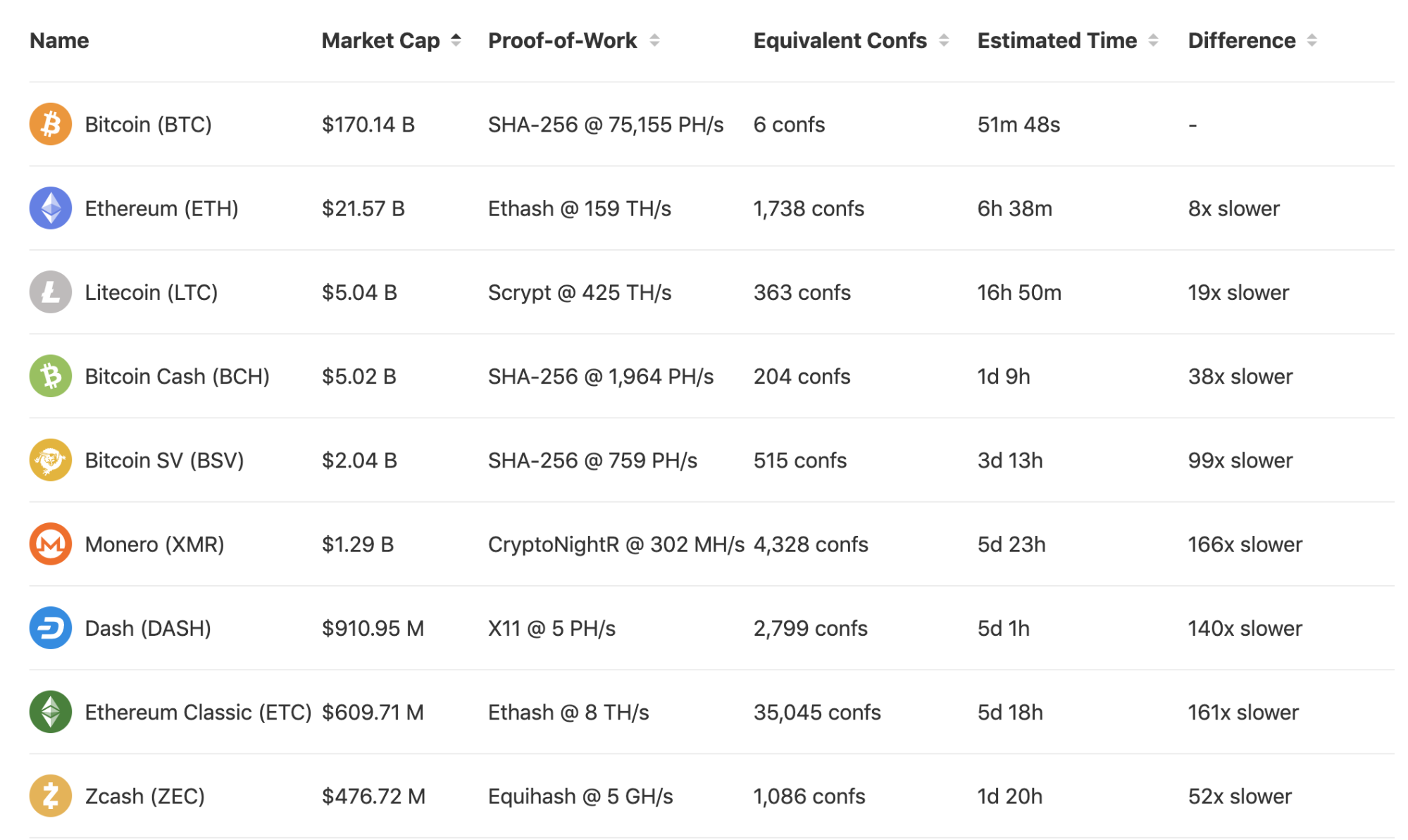

Litecoin came with a few simple modifications to the Bitcoin source code. It increased the supply cap fourfold, to 84 million coins, and cut the block time by a factor of four, to 2.5 minutes, thus making its transactions seemingly four times faster. The reasoning was that merchants could conveniently accept faster confirmations for everyday transactions that do not need to be as secure, since each confirmation represents less (computational) work, while a merchant wanting Bitcoin-like security would simply need to wait for four confirmations. In reality, this was rather misleading, as the security of a transaction stems from the hash rate and difficulty of the network securing the ledger, not from the block time, which is essentially an arbitrary number. As a matter of fact, in the summer of 2019, a Litecoin transaction needed over 60 confirmations, or roughly 2.5 hours, to achieve a level of security similar to that provided by a single Bitcoin confirmation.

Source: howmanyconfs.com
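The four-confirmation argument only holds if both chains are secured by the same amount of work. A minimal sketch makes this explicit, assuming that the hash rates of different algorithms (SHA-256 and Scrypt) can be placed on a common scale, for example by the cost of renting them; raw hash counts of different algorithms are not directly comparable. The hash-rate figures are hypothetical, and this is not necessarily how howmanyconfs.com computes its numbers.

```python
import math

def work_per_block(hashrate: float, block_time_s: float) -> float:
    """Expected work behind one block: hash rate (work units per second) times block interval (s)."""
    return hashrate * block_time_s

def equivalent_confirmations(ref_work: float, alt_work: float, ref_confs: int = 1) -> int:
    """Smallest number of alt-chain blocks whose combined work matches ref_confs reference blocks."""
    return math.ceil(ref_confs * ref_work / alt_work)

BTC_BLOCK_S, LTC_BLOCK_S = 600, 150

# Hypothetical hash rates on a common (e.g. rental-cost) scale; not measured data.
btc = work_per_block(100.0, BTC_BLOCK_S)
scenarios = {
    "same hash rate as Bitcoin": work_per_block(100.0, LTC_BLOCK_S),
    "1/16 of Bitcoin's hash rate": work_per_block(6.25, LTC_BLOCK_S),
}

for label, alt in scenarios.items():
    confs = equivalent_confirmations(btc, alt)
    print(f"{label}: {confs} confirmations, about {confs * LTC_BLOCK_S // 60} minutes")
```

With equal work behind each unit of time, four 2.5-minute blocks match one Bitcoin block; with a sixteenth of the hash rate, the same security takes 64 confirmations, the same order of magnitude as the 2019 figure above.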

Arguably, of all the protocol modifications introduced by Litecoin, the biggest innovation was its new hashing algorithm: Scrypt. It was adopted from Tenebrix, likely the first cryptocurrency to use this algorithm. Scrypt is a sequential memory-hard function: computing it efficiently requires asymptotically more memory than a function that is not memory-hard. This makes ASICs (Application-Specific Integrated Circuits) for Litecoin mining more expensive to produce than those for Bitcoin mining.
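The difference between the two proof-of-work functions can be sketched with Python’s standard library. The sketch assumes the parameters commonly cited for Litecoin (N = 1024, r = 1, p = 1, with the 80-byte block header used as both password and salt, and a 32-byte output) and uses a hypothetical all-zero header; it shows the shape of the computation rather than a mining-ready implementation. `hashlib.scrypt` requires a Python build linked against OpenSSL 1.1 or later.

```python
import hashlib

# Parameters commonly cited for Litecoin's proof of work (assumption).
N, R, P = 1024, 1, 1

def litecoin_pow_hash(header: bytes) -> bytes:
    """Scrypt with the block header as both password and salt, 32-byte output."""
    return hashlib.scrypt(header, salt=header, n=N, r=R, p=P, dklen=32)

def bitcoin_pow_hash(header: bytes) -> bytes:
    """Double SHA-256, which needs almost no working memory."""
    return hashlib.sha256(hashlib.sha256(header).digest()).digest()

# Scrypt's core loop fills a table of N blocks of 128 * r bytes, then reads it
# back in a data-dependent order, so the whole table must stay in fast memory.
scratchpad_bytes = 128 * R * N

header = bytes(80)  # hypothetical all-zero header, for illustration only
print(litecoin_pow_hash(header).hex())
print(bitcoin_pow_hash(header).hex())
print(f"scratchpad per hash: {scratchpad_bytes // 1024} KiB")  # scratchpad per hash: 128 KiB
```

The memory cost is the point: every Scrypt evaluation needs its own scratchpad, so a chip that computes many hashes in parallel also needs many scratchpads, whereas a SHA-256 core needs only a few hundred bytes of state.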

As mentioned earlier, the open-source environment surrounding the cryptocurrency ecosystem, together with the tremendous sense of humor and creativity of the crypto community, triggered what Andreas Antonopoulos called a “Tsunami of Innovation”. However, it was not until 2013 that cryptocurrencies began to pop up at an accelerating rate. While some coins tweaked their properties based on different ideological or philosophical frameworks, others experimented with various technological aspects. Some were even created out of sheer boredom or as jokes. Freicoin, for instance, belonged to the category of coins that attempted to bake distinctive economic principles into their code. It used the same hashing algorithm and block time as Bitcoin, but introduced something called “demurrage”, which usually means the cost of holding something for a long time. It built on the ideas of the German economist and libertarian socialist Silvio Gesell, who suggested demurrage as a way to force the circulation of money and stimulate the economy. You can think of it as inflation achieved through a tax on a stable money supply, rather than through an untaxed money supply that expands slowly but steadily, as with most cryptocurrencies. Put simply, demurrage imposes a tax on holding a currency. As Freicoin’s developer Mark Friedenbach told Wired in 2013:

[Demurrage] can be thought of as causing freicoins to rot, reducing them in value by ~4.9 percent per year. Now to answer the question as to why anybody would want that, you have to look at the economy as a whole. Demurrage causes consumers and merchants both to spend or invest coins they don't immediately need, as quickly as possible, driving up GDP. Further, this effect is continuous with little seasonal adjustments, so one can expect business cycles to be smaller in magnitude and duration. With demurrage, one saves money by making safe investments rather than letting money sit under the mattress.
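The ~4.9 percent figure can be reproduced with compound interest run in reverse. This minimal sketch assumes that demurrage is applied per block at a rate of 2^-20 and that blocks arrive every 10 minutes, as in Bitcoin; treat the exact per-block rate as an assumption rather than a description of Freicoin’s code.

```python
RATE_PER_BLOCK = 2 ** -20          # assumed fraction of value lost per block
BLOCKS_PER_YEAR = 6 * 24 * 365     # 10-minute blocks: 52,560 per year

def apply_demurrage(amount: float, blocks: int, rate: float = RATE_PER_BLOCK) -> float:
    """Value left after `blocks` blocks if a fixed fraction decays away every block."""
    return amount * (1 - rate) ** blocks

annual_loss = 1 - apply_demurrage(1.0, BLOCKS_PER_YEAR)
print(f"value lost per year: {annual_loss:.2%}")   # value lost per year: 4.89%
print(f"100 coins after 5 years: {apply_demurrage(100.0, 5 * BLOCKS_PER_YEAR):.2f}")
```

A tiny per-block decay compounds into a noticeable yearly tax, which is exactly the incentive Friedenbach describes: coins left idle lose value, so holders prefer to spend or invest them.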

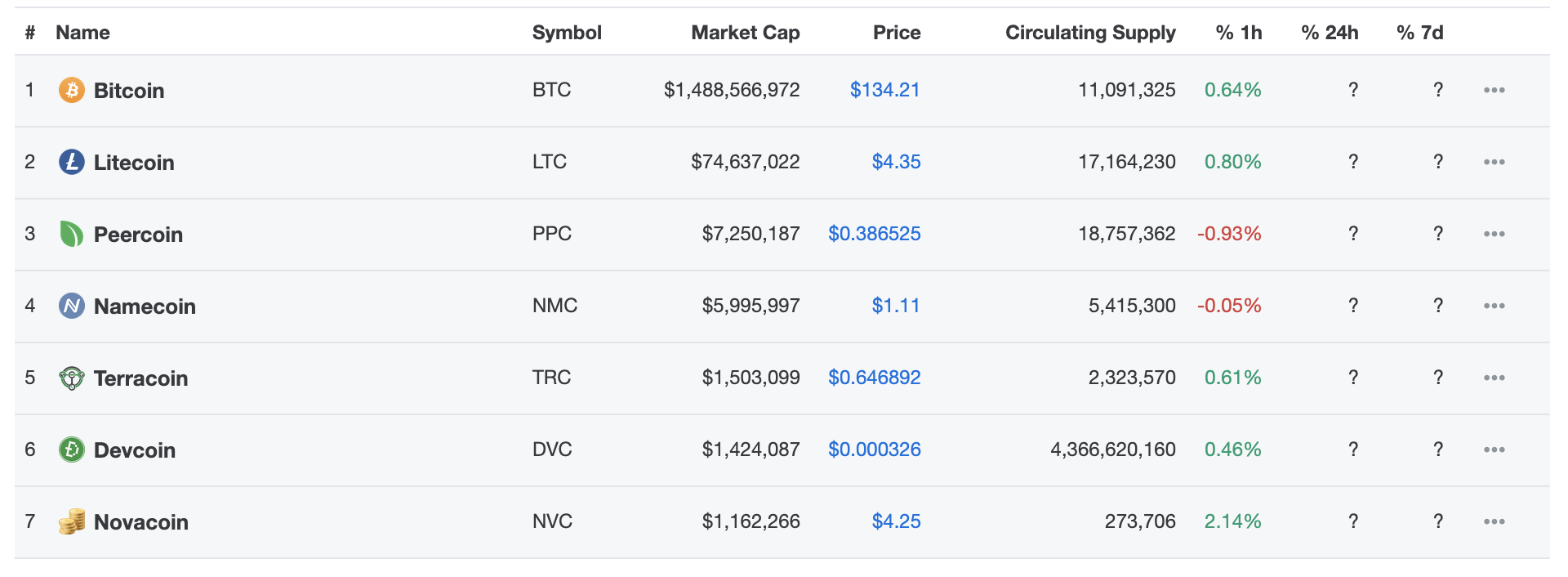

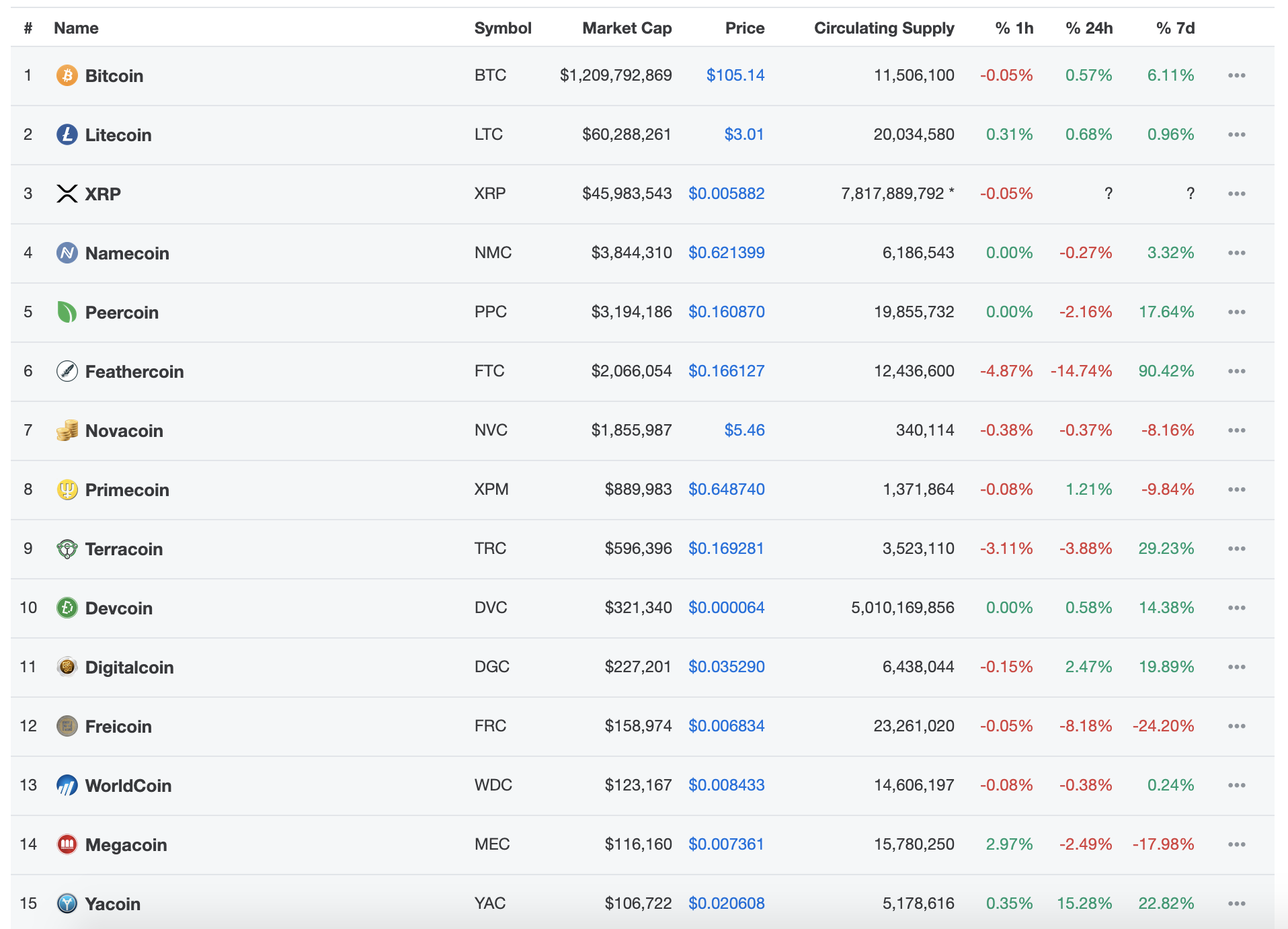

Source: CoinMarketCap

But adherents of different economic philosophies were not the only ones to have their own coins. Soon entire nations followed suit, though not led directly by their governments. Auroracoin, a fork of Litecoin, was created in early 2014 as an Icelandic alternative to Bitcoin and the Icelandic króna. It was supposed to be airdropped to all citizens of Iceland free of charge. By the end of this process, just over 10% of the Icelandic population had claimed their auroracoins. Even though Auroracoin arguably lacked any fundamental value, at some point in 2014 it was in the Top 10 cryptocurrencies by market capitalization, reaching a ludicrous valuation of over $1 billion. As of 2021, Auroracoin has still not achieved any meaningful adoption (even among Icelanders) or ecosystem maturity. Nonetheless, it started a trend that spread further to Spain and Cyprus in the form of Spaincoin and Aphroditecoin, respectively.

The fun side of the crypto community showed its face in the series of events that led to the creation of one of the most famous cryptocurrencies of all time: Dogecoin, a cryptocurrency based on a meme. The image of the Shiba Inu dog from the notorious Internet meme “Doge” has become iconic. Apart from its great sense of humor, this community-centric effort became best known for a number of crowdsourced initiatives, including sending a Jamaican bobsled team to the 2014 Winter Olympic Games in Sochi, Russia, and sponsoring a NASCAR driver’s car with Doge on the paint scheme. Perhaps unsurprisingly, the crypto frenzy of late 2017, which peaked in January 2018, pushed Dogecoin’s valuation to an astonishing $2 billion. In spring 2021, its market capitalization rose to an unbelievable $40 billion, twice the valuation of Deutsche Bank. Moreover, at the time of writing this chapter, Dogecoin’s subreddit has over 2.1 million subscribers, ironically far more than many of the serious crypto projects. Chances are this is partially due to billionaire industrialist Elon Musk’s tweets in support of Dogecoin, which sent crypto prices on a rollercoaster ride in early 2021.

There were also projects that truly aimed to push technological innovation forward. Peercoin was one of them. It launched in August 2012 as PPCoin and was later rebranded. It owes its place in the annals of crypto history to its creators, Scott Nadal and Sunny King, who with it pioneered a whole new realm of Proof-of-Stake (PoS) consensus mechanisms. Their introduction of a PoS-based system sparked a tremendous amount of research in the field of distributed consensus. Since their PoS algorithm does not involve computational work, instead granting the right to create blocks, and with it new coins, in proportion to one’s stake in the system, it immediately gained many aficionados in the eco-friendly part of the crypto community. This feature, unfortunately, came at a cost, namely a decreased level of decentralization and network security compared to PoW systems. The design trade-off made it necessary to have “administrators” who sign checkpoints for every batch of blocks with their trusted public key. This served as a safeguard against network attacks, but essentially created a single point of failure, making the protocol inferior in terms of network security. The quest for more distributed checkpointing solutions for PoS systems has been ongoing ever since.
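The core idea of stake-proportional block production can be sketched in a few lines. This is a generic illustration, not Peercoin’s actual algorithm, which weights stake by “coin age” (coins multiplied by the time they have been held) and keeps a PoW component alongside PoS; the holders and stakes are hypothetical.

```python
import random
from collections import Counter

def pick_validator(stakes: dict[str, float], rng: random.Random) -> str:
    """Choose the next block producer with probability proportional to its stake."""
    holders = list(stakes)
    return rng.choices(holders, weights=[stakes[h] for h in holders], k=1)[0]

stakes = {"alice": 70.0, "bob": 25.0, "carol": 5.0}   # hypothetical stakes
rng = random.Random(42)                              # fixed seed so runs are reproducible
tally = Counter(pick_validator(stakes, rng) for _ in range(10_000))

for holder, wins in tally.most_common():
    print(f"{holder}: {wins / 10_000:.1%} of blocks")
```

No hashing race is involved, which is where the energy savings come from; the flip side is that whoever holds the most coins produces the most blocks.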

At the beginning of August 2013, there were 50 cryptocurrency projects listed on Coinmarketcap.com. For the first time, the list also included Ripple, a cryptocurrency despised by the vast majority of the Bitcoin community, so much so that the sheer act of calling it a cryptocurrency (as is the case in this book) ignites flame wars. Interestingly enough, Bitcoin and Ripple have overlapping code contributors, including Eric Lombrozo, who is much better known for his work on Bitcoin. Another interesting fact is that Ripple was co-founded by Jed McCaleb, the same person who founded the infamous Mt. Gox, at the time the largest Bitcoin exchange, whose fate we discussed in a previous chapter.

The two coins are vastly different in many respects, including architecture, degree of decentralization, trust assumptions, emission regime, and ultimately the end goal of their respective networks. While Bitcoin was designed to operate in the wilderness of the free and open Internet, Ripple was proposed as a real-time gross settlement system serving the very banks and financial institutions that Bitcoin aimed to replace. Its protocol competes with the SWIFT network rather than with Bitcoin. Unlike Bitcoin, Ripple’s protocol relies on a set of trusted validators known as the Unique Node List (UNL), which in 2020 consisted of a few dozen nodes, whereas Bitcoin’s network counts roughly 10,000 full nodes. Thanks to this architectural difference, Ripple’s network can handle a much larger number of transactions at lower fees. Reportedly, it was consistently handling 1,500 transactions per second in September 2018, with a potential throughput of up to 50,000 transactions per second, which is close to the capacity of the Visa card network. Ripple premined its entire supply of 100 billion tokens at the network’s launch, of which only 38 billion are available on the market. The rest is held in reserve by the company itself. This setup has been vocally criticized by many in the Bitcoin community.
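The role of the UNL can be sketched as a quorum check: a node treats a ledger as validated only once enough of the validators it trusts agree on it. The 80% threshold reflects the supermajority usually described for the XRP Ledger and is an assumption here; the validator names are hypothetical, and real consensus involves several rounds of proposals before this final step.

```python
def ledger_validated(validations: set[str], unl: set[str], quorum_pct: int = 80) -> bool:
    """True if enough validators on this node's UNL signed off on the same ledger.

    Validations from validators outside the UNL are simply ignored.
    """
    trusted_votes = len(validations & unl)
    return trusted_votes * 100 >= quorum_pct * len(unl)   # integer math avoids float rounding

unl = {"validator-a", "validator-b", "validator-c", "validator-d", "validator-e"}   # hypothetical

print(ledger_validated({"validator-a", "validator-b", "validator-c", "validator-d"}, unl))  # True
print(ledger_validated({"validator-a", "validator-b", "validator-c",
                        "stranger-1", "stranger-2"}, unl))                                # False
```

The contrast with Bitcoin is that Bitcoin has no list of trusted parties at all: agreement emerges from the chain with the most accumulated work, whoever produced it.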

The popularity, and perhaps to some extent the quality, of a protocol is often indicated by the number of projects forked from its codebase. By 2019, Bitcoin had been forked over 23,000 times, according to its GitHub data. For Ripple, that number is below 1,000. Likely the best-known cryptocurrency partially built on the Ripple codebase is Stellar. The two share many network properties, and in many ways Stellar has been competing with Ripple to onboard banks to its network, especially after IBM announced in early 2019 its plans to use Stellar as the underlying network for its global payments system, World Wire.

Source: CoinMarketCap

Differences

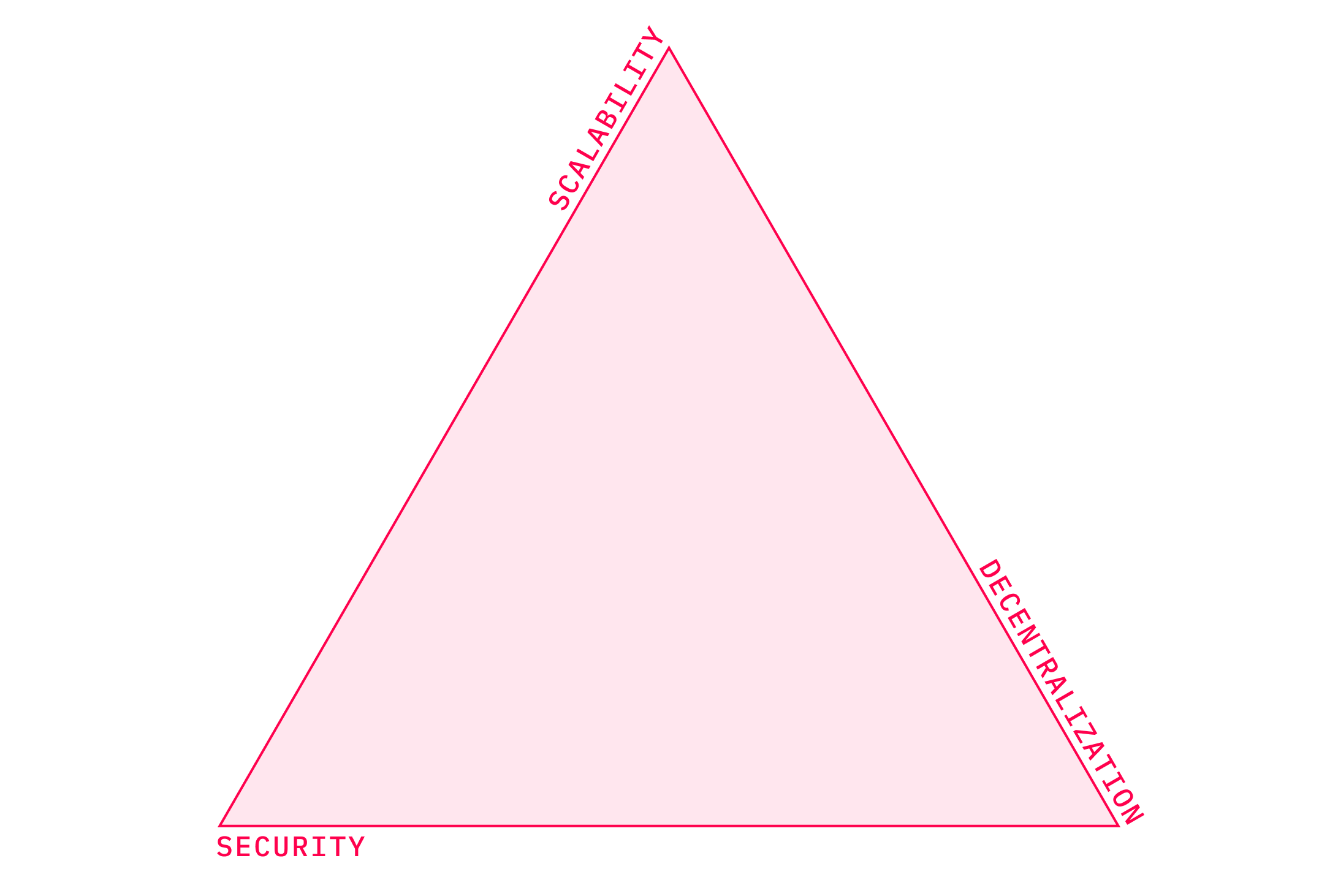

Designing a blockchain network entails a plethora of technical details that need to be figured out. At the highest level, this set of trade-offs is known as the blockchain trilemma. The term was coined by Vitalik Buterin, and it involves three components: decentralization, security, and scalability. As the name suggests, the problem is that you cannot have all three at once; you can only optimize for two of them. Networks that optimize for scalability often do so at the cost of decentralization. A typical example is EOS. While boasting, in their words, “industry-leading speed and latency in transactions and throughput”, the EOS network relies on only 21 validating nodes. Of course, none of the three parameters is binary, and we can talk about various degrees to which each is satisfied. All of this is to say that it is hard to know how many nodes a network needs before we can proclaim it decentralized (enough). We can, however, safely say that EOS is less decentralized than most other smart contract platforms. We will get back to EOS in the next few chapters. For now, let’s briefly explore each of the three dimensions.

Decentralization

While decentralization is the most frequently used word in the cryptocurrency space, it is poorly defined and hard to quantify. One reason may be that people who speak about decentralization may very well mean different aspects of it, even though they often refer to transaction processing. I will borrow here from Buterin’s legendary blog post on this issue. He argues that there are three separate axes of decentralization that are largely independent of each other:

- Architectural (de)centralisation — how many physical computers is a system made up of? How many of those computers can it tolerate breaking down at any single time?

- Political (de)centralisation — how many individuals or organisations ultimately control the computers that the system is made up of?

- Logical (de)centralisation — does the interface and data structures that the system presents and maintains look more like a single monolithic object, or an amorphous swarm? One simple heuristic is: if you cut the system in half, including both providers and users, will both halves continue to fully operate as independent units?

Applying these standards to blockchains in general, we can mostly agree that they are architecturally and politically decentralized, since they run on thousands of different computers that likely have different owners. But they are logically centralized, as there is a single state all the machines agree upon; in other words, a single source of truth. This is a feature, and probably the very reason blockchain was created: its magic lies in combining architectural decentralization with logical centralization. Buterin goes on to explain three reasons why decentralization is important:

- Fault tolerance — decentralised systems are less likely to fail accidentally because they rely on many separate components that are not likely.

- Attack resistance — decentralised systems are more expensive to attack and destroy or manipulate because they lack sensitive central points that can be attacked at much lower cost than the economic size of the surrounding system.

- Collusion resistance — it is much harder for participants in decentralised systems to collude to act in ways that benefit them at the expense of other participants, whereas the leaderships of corporations and governments collude in ways that benefit themselves but harm less well-coordinated citizens, customers, employees and the general public all the time.

These are commonly agreed-upon benefits of decentralized systems. As different machines are run in different locations by different people, they are less likely to fail, be attacked, or collude all at the same time. But it gets interesting when we introduce what engineers call “common mode failure”. The term refers to failures that are not statistically independent: failures in different parts of a system (or in different systems) may share a single cause, such as an environmental condition. The point Buterin makes here is that this must be considered when discussing the decentralization of blockchain networks, as they are prone to common mode failures for multiple reasons. For instance, if all nodes run the same client, they are all potentially vulnerable to the same single bug in the software. The software, as well as the principles it is built upon, is designed by a team of developers who could potentially be corrupted. Or take Bitcoin itself. At the time of writing, most of the mining power is located in one country: China. We can therefore speculate that the Bitcoin network is, in theory, vulnerable to a single political decision on whether to seize all the mining equipment, which several Chinese regions began doing in the spring of 2021. Moreover, the mining hardware is mostly produced by a single company and may be bugged (intentionally or not), making the majority of miners potentially vulnerable to the same flaw.

Of course, PoW systems are not the only ones with such symptoms. Many PoS-based altcoins end up hoarded by their core fans. In fact, in virtually every cryptocurrency network, a small number of addresses holds the majority of the coins. This can usually be assessed by looking at the so-called “rich list” of a particular network. Based on data from mid-2019, 0.57% of all Bitcoin addresses owned 86.73% of all coins in circulation. Similarly, in Litecoin, 86.34% of coins were owned by 1.6% of addresses. Coins like Dash are just as concentrated, as 89.84% of them belonged to 0.79% of addresses. We see this pattern in the vast majority of cryptocurrencies: usually about 1% of addresses control 85-95% of coins. While in PoW systems such coin concentration has no direct implications for political decentralization, in PoS networks it does, because stake determines who produces blocks. However, even here it is not so simple, as there are two important factors to take into account. First, coins tend to be concentrated at cryptocurrency exchanges, which hold large amounts of them in just a few addresses. Second, since it is easy to generate multiple addresses at will, we cannot assume that each address has a different owner. These two factors make assessing wealth concentration in cryptocurrencies quite difficult, though the first factor is likely the more prevalent one.
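The rich-list figures above are simple ratios over sorted balances. A minimal sketch, using a hypothetical list of balances rather than real chain data:

```python
def top_share(balances: list[float], top_fraction: float) -> float:
    """Share of all coins held by the richest `top_fraction` of addresses."""
    ranked = sorted(balances, reverse=True)
    k = max(1, round(len(ranked) * top_fraction))   # at least one address
    return sum(ranked[:k]) / sum(ranked)

# Hypothetical rich list: three whales (say, exchange wallets) and 997 small holders.
balances = [5000.0, 3000.0, 1000.0] + [1.0] * 997
print(f"top 1% of addresses hold {top_share(balances, 0.01):.1%} of coins")   # 90.1%
```

As noted above, such a number says little on its own: the three largest balances might belong to exchanges holding coins for thousands of users, while a single person might control many of the small addresses.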

To assess decentralization properly, we need to look at it from many different angles. In addition to the aspects mentioned above, an ideal decentralized network should also be built on (cryptographic) principles researched by multiple teams, and developed by multiple teams employed by different companies (or by volunteers). I’d argue that, however far-fetched these criteria may seem, they are likely fulfilled only by Bitcoin and Ethereum. Ideally, a network should also have multiple competing implementations that are mutually compatible, as we see in the case of the Lightning Network. One of the ultimate preconditions for all this is that knowledge of the protocol is widely spread and accessible not only to the community of developers, but to the public as well.

Security

Decentralization is often used interchangeably with security. While there is a strong correlation between the two, security in a broader sense may entail more than just a blockchain’s defensibility against a plethora of external attacks. Take smart contracts on Ethereum. Even though they do not directly relate to the security of the network itself, if we look holistically at different smart contract networks, the choice of programming language may have implications for overall security assumptions. In June 2016, CoinDesk released what was, until then, its most detailed report on Ethereum. It found that Ethereum’s (Solidity) smart contracts were in general 10 times more buggy, and thus less secure, than typical enterprise software, because Solidity was a new language and most of the developers working with it had relatively little experience. We can see this being addressed by efforts to allow writing Ethereum smart contracts in different languages. Parity Technologies, a company founded by Gavin Wood, for example, worked on a library called Fleetwood to make writing smart contracts in Rust as convenient as in Solidity. Efforts to create new languages with simpler syntax, such as Vyper, had a similar goal: to make smart contracts on Ethereum more secure.

Besides these “small” nuances, there is a wide spectrum of attack vectors any blockchain network needs to withstand. In principle, there are two major ways to control a network: taking control of the information flow between peers, or holding the majority in the network’s consensus. The 51% attack relates to the latter, and it is likely the attack most often discussed in the crypto community, even though it is rarely performed. As its name suggests, it involves taking control of a majority of the network’s hash rate in PoW systems, or of the coins at stake in PoS systems. In either kind of system, it is the most costly attack of all. Once it is successfully executed, the adversaries may reverse their own transactions, prevent other people’s transactions from being confirmed, and double-spend. It is important to mention that they could not steal anyone’s money by forging transactions, as hash power does not enable them to forge digital signatures.
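Why a majority matters so much can be quantified with the calculation from Section 11 of the Bitcoin whitepaper, ported here to Python (the snake_case name is a translation of the whitepaper’s C function). It models honest and attacker blocks as competing random processes and returns the probability that an attacker controlling a share q of the hash rate ever catches up from z blocks behind.

```python
import math

def attacker_success_probability(q: float, z: int) -> float:
    """Probability that an attacker with hash-rate share q eventually overtakes
    the honest chain when it starts z blocks behind."""
    p = 1.0 - q
    if q >= p:
        return 1.0   # with a majority, the attacker catches up with certainty
    lam = z * (q / p)   # expected attacker progress while honest miners find z blocks
    total = 1.0
    for k in range(z + 1):
        poisson = math.exp(-lam) * lam ** k / math.factorial(k)
        total -= poisson * (1 - (q / p) ** (z - k))
    return total

for q in (0.1, 0.3, 0.45, 0.51):
    print(q, [round(attacker_success_probability(q, z), 4) for z in (1, 6)])
```

Below 50%, the attacker’s chances fall exponentially with every additional confirmation; at 50% or more they are 1 no matter how long a merchant waits, which is why a majority attack is in a different league from other attacks on consensus.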

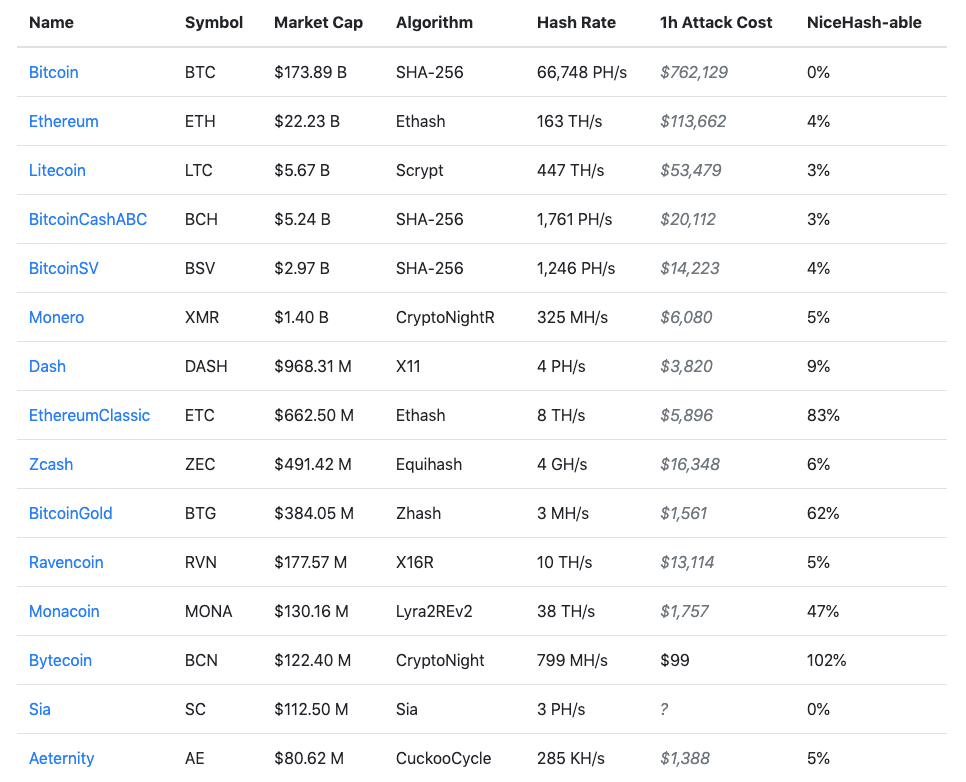

When comparing the costs of attacking different PoW chains, tools like Crypto51.app are useful, as they track how much it would cost, in theory, to perform such attacks on different coins. As an illustration, here is a snapshot from the end of July 2019. The difference between the cost of attacking Bitcoin and that of attacking other cryptocurrencies is vast. The costs are calculated from the listed prices for different algorithms on NiceHash, the largest crypto-mining marketplace. That brings us to another aspect worth considering when assessing the security properties of a blockchain network, especially a PoW one. Notice the last column in the table. It shows how much of a network’s hash rate is available for rent on NiceHash; in short, how much you could buy immediately if you decided to do so at any given moment. A value of 0% means that an outside attacker would have a hard time acquiring the hash rate needed to perform the attack. This may indicate either the network’s robustness or the scarcity of mining equipment. For instance, Bitcoin ASICs are notoriously scarce pieces of hardware. While other coins are often mined with GPUs, which are usually somewhat more accessible, in a mining frenzy such as the one in the winter of 2017, purchasing new equipment might be equally difficult.

Source: Crypto51
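The arithmetic behind such a table is simple. The sketch below assumes the attack cost is estimated by renting the network’s full current hash rate at marketplace prices, which are typically quoted per unit of hash rate per day; the function names and all figures are hypothetical.

```python
def attack_cost_per_hour(network_hashrate: float, price_per_unit_per_day: float) -> float:
    """Rental cost of matching the network's entire hash rate for one hour.

    Both arguments must use the same unit, e.g. TH/s and USD per TH/s per day.
    """
    return network_hashrate * price_per_unit_per_day / 24

def rentable_share(rentable_hashrate: float, network_hashrate: float) -> float:
    """Fraction of the network's hash rate that could be rented right now."""
    return rentable_hashrate / network_hashrate

# Hypothetical small PoW coin: 50 TH/s network, 1.20 USD per TH/s per day, 75 TH/s for rent.
print(f"1h attack cost: ${attack_cost_per_hour(50.0, 1.20):,.2f}")   # 1h attack cost: $2.50
print(f"rentable share: {rentable_share(75.0, 50.0):.0%}")          # rentable share: 150%
```

A rentable share above 100% means an attacker could rent more hash power than the entire honest network currently has, without buying a single machine.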

The most prominent instance of a 51% attack was executed on Ethereum Classic (ETC) in January 2019. At the time, ETC was the 18th-largest cryptocurrency by market capitalization. The attacker was able to take over roughly 60% of the network’s hash rate, which was around 9 TH/s (9 trillion hashes per second). To put this into perspective, performing a similar attack on Ethereum would have required 20 times more computational power, and on Bitcoin 4,500 times more at the time. This would come with a pretty hefty price tag. Note that this happened in a period when prices, as well as hash rates, were reaching a local bottom. Half a year after the attack, Bitcoin’s hash rate would double, while both Ethereum networks would stay at approximately the same level.

While some types of attacks are well known from traditional information systems, such as Distributed Denial of Service (DDoS) or Sybil attacks, others relate more specifically to open blockchains. Take a Denial of Service attack, which usually aims to make an online service unavailable by overwhelming it with traffic. The computer, or in our case the network, receives more requests than it can handle. In open blockchains, this can be done by sending lots of junk data to a node, which is then too overwhelmed to process regular data. Blockchain networks deal with this in multiple ways, typically with countermeasures on two levels: protocol and node. Protocol-level measures include, for instance, the block size: a cap on the maximum amount of data that fits into valuable blockspace. As discussed in the previous chapter, Bitcoin’s 1 MB limit was controversial for many years and caused countless heated debates on forums, subreddits, Twitter, and at public conferences. This topic alone divided the Bitcoin community into many tribes. Block size, though, is just one of many capped parameters. In the case of Bitcoin, there is a cap on the maximum:

- number of signature checks that a transaction or block may request

- script size (10,000 bytes)

- size of a value pushed while executing a script (520 bytes)

- number of keys in a multisig transaction (20 keys)

- number of “expensive” (non-push) operations in a script (201 operations)

- number of stack elements (1,000 elements)
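A hypothetical checker makes the role of these caps concrete. The constant names and values below mirror those in Bitcoin Core’s script interpreter, but the function itself is an illustration: it takes already-measured sizes and counts as inputs instead of parsing and executing a real script, and it ignores the separate signature-operation limit.

```python
# Names and values mirror constants in Bitcoin Core's script code.
MAX_SCRIPT_SIZE = 10_000           # bytes
MAX_SCRIPT_ELEMENT_SIZE = 520      # bytes per pushed value
MAX_PUBKEYS_PER_MULTISIG = 20
MAX_OPS_PER_SCRIPT = 201           # non-push opcodes
MAX_STACK_SIZE = 1_000             # main stack plus alt stack

def script_limit_violations(script_size: int, push_sizes: list[int], op_count: int,
                            multisig_keys: int, peak_stack_size: int) -> list[str]:
    """Return the names of the limits a script would break (empty list if none)."""
    checks = {
        "script size": script_size > MAX_SCRIPT_SIZE,
        "push size": any(size > MAX_SCRIPT_ELEMENT_SIZE for size in push_sizes),
        "opcode count": op_count > MAX_OPS_PER_SCRIPT,
        "multisig keys": multisig_keys > MAX_PUBKEYS_PER_MULTISIG,
        "stack size": peak_stack_size > MAX_STACK_SIZE,
    }
    return [name for name, violated in checks.items() if violated]

print(script_limit_violations(107, [72, 33], 2, 0, 2))          # []
print(script_limit_violations(12_000, [521], 250, 21, 1_001))    # all five limits
```

Because every limit is checked before any expensive work is done, a node can reject oversized scripts cheaply instead of letting an attacker make it burn CPU and memory.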

These are all hardcoded limits in the protocol itself that make DoS attacks more difficult. But that’s not all. A set of clever rules determines the behavior of nodes and helps prevent such attacks from being executed. Many altcoins implemented the same or equivalent rules, and where they made changes, they emulated similar behavior. To achieve this, nodes typically:

- don’t forward double-spend transactions/blocks

- don’t forward orphan transactions/blocks

- don’t forward the same block/transaction to the same peer

- don’t forward or process non-standard transactions

- penalize peers that send invalid signature messages

- ban IP addresses that misbehave

- disconnect from peers that don’t comply with the rules
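The last few rules can be sketched as a misbehavior score kept per peer. Bitcoin Core historically worked along these lines, banning a peer once its score reached a default threshold of 100 (the `-banscore` option); newer releases have reworked the mechanism, and the class and penalty values below are hypothetical.

```python
from collections import defaultdict

BAN_THRESHOLD = 100  # historical Bitcoin Core default for -banscore

class PeerManager:
    """Hypothetical per-IP misbehavior tracker, for illustration only."""

    def __init__(self) -> None:
        self.scores = defaultdict(int)   # IP address -> accumulated penalty
        self.banned = set()

    def misbehaving(self, ip: str, penalty: int) -> None:
        """Add a penalty (e.g. for an invalid signature); ban once the threshold is reached."""
        if ip in self.banned:
            return
        self.scores[ip] += penalty
        if self.scores[ip] >= BAN_THRESHOLD:
            self.banned.add(ip)   # a real node would also disconnect the peer here

    def accept_connection(self, ip: str) -> bool:
        return ip not in self.banned

peers = PeerManager()
peers.misbehaving("203.0.113.7", 50)            # documentation-range address, hypothetical peer
print(peers.accept_connection("203.0.113.7"))   # True
peers.misbehaving("203.0.113.7", 50)
print(peers.accept_connection("203.0.113.7"))   # False
```

Scoring rather than banning on the first offense lets a node tolerate occasional honest mistakes while still cutting off peers that persistently send junk.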

Yet even these measures won’t stop an adversary from launching a DoS attack on a network should he decide to send thousands of very small transactions in order to fill the blocks and, consequently, the mempool. This would increase confirmation times, possibly causing significant delays to other, more legitimate transactions, and thus slow down the entire network. We call such an activity a Flood attack. While very easy to perform, it is quite expensive to sustain for a long time due to transaction fees. Some parties are suspected of having carried out such attacks while campaigning for a bigger block size limit in Bitcoin, though this has never been confirmed or proven, largely because it is hard to distinguish legitimate transactions from “malicious” ones.
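A back-of-the-envelope sketch shows why sustaining a flood is expensive. It assumes the attacker has to fill every block completely at a fixed fee rate; in reality, honest users would compete for the same space and push fee rates up, so these hypothetical figures likely understate the cost.

```python
SATS_PER_BTC = 100_000_000
MAX_BLOCK_VSIZE = 1_000_000   # virtual bytes: Bitcoin's 4M weight-unit limit divided by 4
BLOCKS_PER_DAY = 144          # one block roughly every 10 minutes

def flood_cost_btc(fee_rate_sat_per_vb: float, blocks: int,
                   block_vsize: int = MAX_BLOCK_VSIZE) -> float:
    """Fees needed to fill `blocks` consecutive blocks entirely with the attacker's transactions."""
    return fee_rate_sat_per_vb * block_vsize * blocks / SATS_PER_BTC

for rate in (1, 10, 50):   # hypothetical fee rates in sat/vB
    print(f"{rate:>3} sat/vB: {flood_cost_btc(rate, BLOCKS_PER_DAY):.2f} BTC per day")
```

The cost grows linearly with both the fee rate and the duration, which is why floods tend to be short bursts rather than sustained campaigns.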

Other possible attacks include the Eclipse attack, in which a malicious user gains control over a node’s access to the information flowing through the network by controlling a large number of IP addresses and machines. This way, the adversary can effectively isolate the victim from the rest of the network by monopolizing all of the victim’s outgoing and incoming connections. If performed on enough nodes, this potentially allows an attacker to split the mining power, and thus perform a 51% attack with less than 50% of the mining power, as well as to double-spend transactions.
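Controlling many IP addresses matters because a node picks its outgoing peers from a table of known addresses. In a deliberately simplified model, where each of 8 outbound slots independently lands on an attacker address with probability equal to the attacker’s share of the table, the chance of a full eclipse is that share raised to the 8th power. The independence assumption and the slot count are simplifications; real attacks also poison address tables and wait for node restarts.

```python
def eclipse_probability(attacker_fraction: float, outbound_slots: int = 8) -> float:
    """Chance that every outbound connection lands on an attacker-controlled address.

    Simplified model: each slot independently picks a uniformly random address
    from the node's table, a fraction of which belongs to the attacker.
    """
    if not 0.0 <= attacker_fraction <= 1.0:
        raise ValueError("attacker_fraction must be between 0 and 1")
    return attacker_fraction ** outbound_slots
```

Owning half of the table gives well under a 1% chance, while owning 90% raises it above 40%, which is why attackers need a very large number of addresses.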

Another interesting vulnerability that might theoretically be exploited, even if a node maintains communication with honest peers, is time jacking. As you can imagine, the concept of time in a technology like blockchain is extremely important. Transactions must be ordered, and all nodes need to agree on the particular order of every single transaction. From a node’s perspective, time determines the validity of new blocks. This is where it gets a bit tricky, as Bitcoin is a truly global, borderless system, and the system clocks of its nodes are never perfectly synchronized. When peers establish connections with each other, they exchange their system time. This way, each node keeps what we call a network time counter, derived from the median of the time offsets reported by its peers. This allows a potential adversary to announce inaccurate timestamps when connecting to a node and thereby alter its network time counter. Such wrongdoing may deceive the node and lead it to accept alternate blocks, which ultimately may increase the chances of a successful double-spend. To my knowledge, no significant instances of such attacks have been recorded or reported.
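A simplified sketch of such a network time counter shows why time jacking needs a majority of the victim’s connections. We assume the node shifts its local clock by the median peer offset and ignores offsets beyond a 70-minute cap, modeled on Bitcoin’s limit; real clients add further rules (minimum sample counts, warnings, block timestamp checks) that are omitted here.

```python
from statistics import median

MAX_ADJUSTMENT = 70 * 60  # seconds; assumed cap, modeled on Bitcoin's 70-minute limit


def network_adjusted_time(local_time: int, peer_times: list[int]) -> int:
    """Local clock shifted by the median offset reported by peers.

    Simplified: a median offset beyond MAX_ADJUSTMENT is ignored entirely.
    """
    if not peer_times:
        return local_time
    offset = median([t - local_time for t in peer_times])
    if abs(offset) > MAX_ADJUSTMENT:
        offset = 0
    return local_time + int(offset)
```

With three honest peers and four attackers reporting a clock one hour ahead, the median flips to the attackers’ value; with an honest majority, the lies are simply outvoted.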

Scalability

Scalability is an important factor because it ultimately determines the capacity of any network. Optimizing for it means ensuring that applications running on top of the network do so quickly and seamlessly while supporting a high volume of transactions, which makes them less likely to break down when demand and transaction traffic rise to unusual levels. As mentioned above, on-chain scalability usually comes at the cost of one of the other two dimensions, typically decentralization. I’d argue that scalability is not the most important property for open blockchains, as I believe their ultimate survival depends on being decentralized and censorship resistant. We have enough scalable alternatives to blockchains, such as traditional relational databases. Blockchains were not invented to compete with those systems in scalability, and will likely always lose due to their (more or less) decentralized nature.

However, there is a valid argument that many of humankind’s inventions have morphed over time along with their main utility. Let’s be honest, DARPA researchers could never have imagined that one of the killer apps and pastimes for people on the Internet would be cat pictures and memes. Yet, I believe it is of the utmost importance for truly open blockchains to optimize mainly for decentralization at the cost of scalability, at least as far as layer one is concerned. This is in line with what we can see in some of the most robust blockchain networks, where scalability goals are meant to be achieved through upper layers built on top of the root blockchain. In the case of Bitcoin it’s the Lightning Network, and in Ethereum’s case it is Plasma or State Channels.

We have discussed broadly what kind of trade-offs need to be considered when crafting blockchain networks. While there are many technical aspects and properties that most cryptocurrencies share, let’s explore how decisions related to the blockchain trilemma translate to the protocol level. There are a few parameters that typically differ in various blockchain implementations: block size, block time, monetary policy, consensus protocol, and fungibility.

Block Size

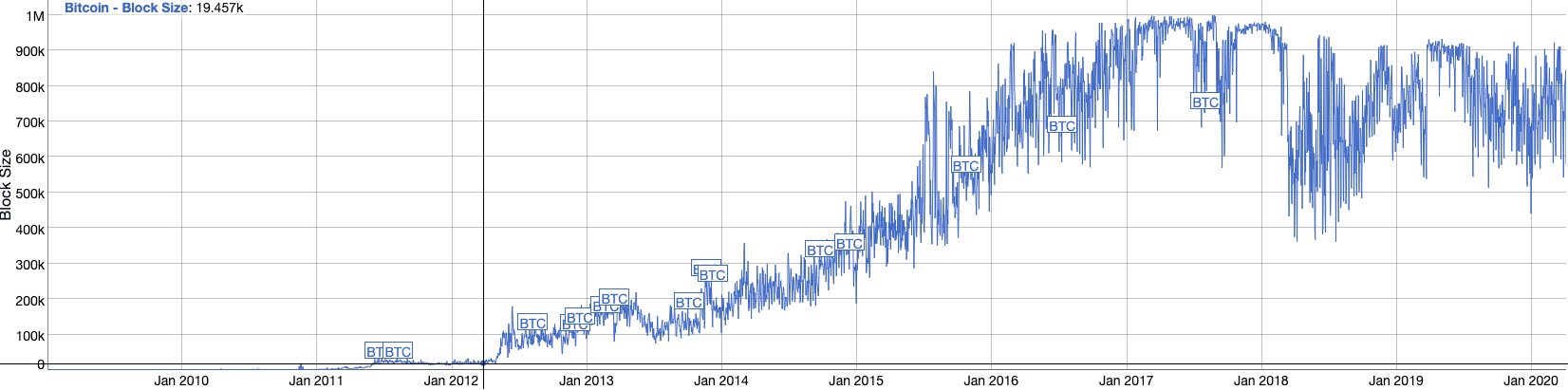

A significant part of the discussion about scaling was initially centered around this crucial parameter: block size. Especially in the case of Bitcoin, it caused a lot of controversy, heated debates, personal attacks, and ultimately the division of the community as well as of Bitcoin’s blockchain itself. Regardless of the side one takes in this debate, it is important to recognize the relevance of this parameter. Bitcoin started off with a hardcoded limit of 1 MB per block. Note that this doesn’t mean that each block was of that size; the limit referred only to the maximum amount of data that could be stored in a block. In fact, for many years the blocks were far from reaching the limit, as you can see in the chart below. Any block exceeding the limit would be automatically rejected by the nodes. Keeping the limit as low as possible has been, as many in the community believe, of the utmost importance for maintaining a robust node count, and thus the decentralization of the network. The smaller the blocks and the blockchain itself, the easier it is for ordinary people to run full nodes. This parameter has a direct impact on one of the most used metrics in the cryptocurrency space: transactions per second (TPS). The bigger the blocks, the higher the transaction throughput.
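The link between block size and TPS is plain division. The sketch below assumes an average transaction size of 250 bytes, a hypothetical round figure (real sizes vary widely), and ignores SegWit’s weight accounting.

```python
def max_tps(block_size_bytes: int, avg_tx_bytes: int, block_time_seconds: float) -> float:
    """Upper bound on transactions per second if every block is completely full."""
    txs_per_block = block_size_bytes / avg_tx_bytes
    return txs_per_block / block_time_seconds


bitcoin_like = max_tps(1_000_000, 250, 600)  # roughly 6.7 TPS under these assumptions
```

Doubling the block size doubles the bound, which is exactly why block size became the focal point of the scaling debate.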

Source: bitinfocharts.com

As there is no clear answer on what the ideal block size should be, many cryptocurrencies have implemented an arbitrary block size limit, especially those that built on Bitcoin’s code base and used a different limit to justify their fork. Part of Bitcoin’s community forked off in August 2017 to create Bitcoin Cash, which increased the block size to 8 MB. This was later increased further to 32 MB. At the time of writing, Bitcoin SV, itself a fork of Bitcoin Cash, is set to raise its maximum block size to 2 GB. That said, there are some exceptions to the fixed block size. Ethereum, for instance, does not have a block size limit per se, but it has a gas limit that is enforced by the network’s consensus and adjusted dynamically by miners. We will discuss this more in the next chapter, but for now it is important to note that while the protocol limits how much the gas limit may increase or decrease relative to the previous block, there is no ultimate cap on the block size. We can see a similar pattern in Monero, where the maximum block size is a function of the sizes of the previous 100 blocks. This allows blocks to adapt dynamically to current traffic while maintaining a certain level of scarcity within the block space, and thus creating a (transaction) fee market. Perhaps counterintuitively, the block size limit does not have a significant impact on the size of the blockchain itself, as this is determined mainly by the size, number, and complexity of transactions on the network, and it can be optimized through various techniques, including digital signature schemes.
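Both dynamic schemes can be sketched in a few lines. These are simplified models, not full consensus rules: for Ethereum we assume the Yellow Paper style rule that a block’s gas limit must differ from its parent’s by less than 1/1024 of the parent’s limit, and for Monero we assume a hard cap of twice the median size of the last 100 blocks, omitting the fee penalty zone, the minimum penalty-free size, and later long-term median rules.

```python
from statistics import median


def ethereum_gas_limit_bounds(parent_gas_limit: int) -> tuple[int, int]:
    """Exclusive bounds for a child block's gas limit.

    Simplified rule: each block may move the limit by less than 1/1024 of the
    parent's limit, so it can drift over many blocks but never jump.
    """
    delta = parent_gas_limit // 1024
    return parent_gas_limit - delta, parent_gas_limit + delta


def monero_max_block_size(previous_sizes: list[int]) -> int:
    """Simplified Monero-style cap: twice the median size of the last 100 blocks."""
    return 2 * int(median(previous_sizes[-100:]))
```

In both cases the allowed size follows recent history rather than a fixed constant, which is what lets capacity track demand while keeping blockspace scarce.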

Block Time

Block time is another metric that may differ when comparing various cryptocurrencies. It describes the average time it takes for a new block to be created in a network. It is often used interchangeably with so-called confirmation time, the time after which we can consider a transaction to be confirmed. While intuition might suggest that faster is better, it is important to take into account the security aspects of confirmation times discussed at the beginning of this chapter.

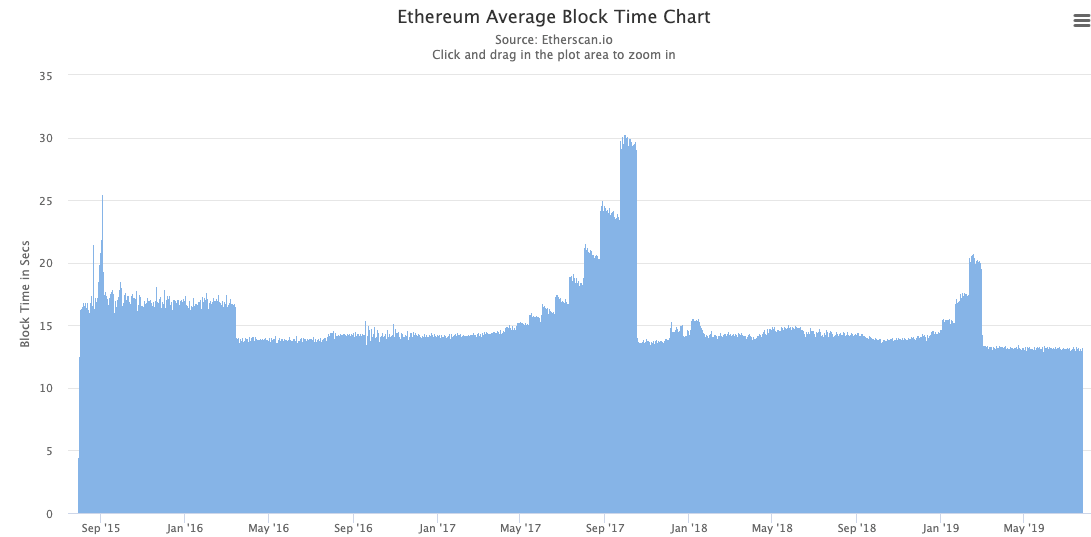

Block time usually ranges from a few seconds to Bitcoin’s 10 minutes. While the target is fixed, the actual block time is dynamic, because it depends on the mining difficulty, which is adjusted according to the network’s hash rate over set periods (measured in blocks). In Bitcoin, the network targets a block time of 10 minutes and adjusts the difficulty accordingly every 2016 blocks, or roughly every two weeks. By the nature of Proof-of-Work systems, where miners solve computational puzzles, block time is probabilistic. For instance, Buterin suggested a block time of 12 seconds in the Ethereum white paper, but in reality we have seen average block times between 14 and 17 seconds, apart from the period of the CryptoKitties frenzy when block times got as high as 30 seconds, as seen in the chart below.
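Bitcoin’s retargeting rule can be sketched as follows: after each 2016-block period, the target is scaled by how long the period actually took relative to two weeks, with the adjustment clamped to a factor of four. This is a simplified model that omits the maximum-target (minimum difficulty) cap and the compact encoding used in block headers.

```python
TARGET_SPACING = 600                                  # seconds per block
RETARGET_INTERVAL = 2016                              # blocks between adjustments
TARGET_TIMESPAN = TARGET_SPACING * RETARGET_INTERVAL  # two weeks in seconds


def next_target(old_target: int, actual_timespan: int) -> int:
    """Bitcoin-style retarget. A larger target means lower difficulty.

    The measured timespan is clamped so one adjustment never exceeds 4x either way.
    """
    clamped = max(TARGET_TIMESPAN // 4, min(actual_timespan, TARGET_TIMESPAN * 4))
    return old_target * clamped // TARGET_TIMESPAN
```

If the last 2016 blocks arrived in one week instead of two, the target halves, so difficulty doubles and block times drift back toward 10 minutes.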

Source: etherscan.io

Monero’s target block time is 2 minutes, which its actual average block time matches quite consistently. Litecoin and Dash target 2.5 minutes, as did Zcash until its Blossom upgrade in late 2019 reduced the target to 75 seconds, while PIVX and its forks produce blocks every 60 seconds. While it may be in the network’s interest to keep the block time low for faster confirmations, there are risks in pushing this number too low. Due to the nature of P2P networks, it takes time to propagate information (in this case, about new blocks) across a network of computers on different continents with different connection quality. As this may take a few seconds, a low block time may result in a higher rate of orphan blocks: blocks that are mined but ultimately not accepted into the longest chain. Orphan blocks mean that a miner’s work and resources are wasted, so they are undesirable.
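A common back-of-the-envelope model treats block discovery as a Poisson process: a freshly mined block risks being orphaned if a competing block is found somewhere else before it has propagated. The 2-second propagation delay used below is a hypothetical figure.

```python
import math


def stale_probability(propagation_delay_s: float, block_time_s: float) -> float:
    """Chance that a competing block is found while a new block is still propagating.

    Assumes block discovery is a Poisson process with mean interval block_time_s.
    """
    return 1.0 - math.exp(-propagation_delay_s / block_time_s)


ten_minutes = stale_probability(2, 600)   # about 0.3%
fifteen_seconds = stale_probability(2, 15)  # about 12.5%
```

The same network delay that is negligible at 10-minute blocks becomes a significant source of wasted work at 15-second blocks.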

Monetary Policy

Monetary policies often differ between cryptocurrencies, for various reasons. While Bitcoin came with a fixed limit of 21 million coins that will ever be in circulation, an important and most likely unchangeable feature of “hard” money, other cryptocurrencies may come with different assumptions about inflation. Zcash and Decred copied the 21 million supply cap, Dash capped its supply at almost 19 million, and Litecoin at 84 million. Monero, on the other hand, has employed a constantly decreasing block reward. However, once the reward reaches 0.6 XMR per block, around 2022, it will stay fixed from that point onwards. This will result in a never-ending but continuously decreasing rate of annual inflation. While most of the projects that underwent ICOs implemented a fixed supply for their coins, including smart contract platforms such as Tezos, Tron, Nem, Neo, EOS, and Cosmos, there are again some exceptions, Ethereum among them. One of the curious traits of Ethereum has long been the fact that its monetary policy has not been announced. The community has focused on improving protocol features, mainly the development of layer two solutions and the transition to Proof-of-Stake, and left this decision for later. There was a rough consensus that Ethereum would keep a certain annual inflation rate, likely between 0.5% and 2%. The reasoning behind the inflation rate is that Ethereum has not been meant to be money or a payment system as we know it, but rather a globally decentralized virtual machine for decentralized applications. In early 2021, with EIP-1559 due to be accepted by the community, it seemed that Ethereum could in the future even achieve a deflationary emission schedule.
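Both policies reduce to short calculations. Bitcoin’s 21 million cap follows from a 50 BTC initial subsidy halving every 210,000 blocks, summed in whole satoshis, and it actually lands slightly below 21 million because of integer rounding. For Monero’s tail emission, we assume 720 blocks per day (from the 2-minute target); the circulating supply passed in is a hypothetical input.

```python
SATOSHIS_PER_BTC = 100_000_000
HALVING_INTERVAL = 210_000  # blocks per halving era


def bitcoin_max_supply_sats() -> int:
    """Sum block subsidies over all halving eras, in whole satoshis."""
    subsidy = 50 * SATOSHIS_PER_BTC
    total = 0
    while subsidy > 0:
        total += HALVING_INTERVAL * subsidy
        subsidy //= 2  # integer halving: fractions of a satoshi are dropped
    return total


def monero_tail_inflation(circulating_xmr: float, tail_reward_xmr: float = 0.6,
                          blocks_per_year: int = 720 * 365) -> float:
    """Annual inflation rate once Monero's fixed tail reward applies."""
    return tail_reward_xmr * blocks_per_year / circulating_xmr
```

`bitcoin_max_supply_sats()` returns 2,099,999,997,690,000 satoshis, about 20,999,999.98 BTC. The Monero function shows why tail emission means ever-lower inflation: the yearly issuance of roughly 157,680 XMR stays constant while the supply it is divided by keeps growing.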

Consensus protocol

As introduced in the first chapter, the Byzantine Generals Problem, described by computer science researchers Leslie Lamport, Robert Shostak, and Marshall Pease, has long been a difficult problem to solve, especially when it comes to permissionless distributed networks. While Nakamoto consensus, based on Proof-of-Work, represented a breakthrough when it was launched in 2009, numerous designs have since been proposed as improvements on it.

In simple words, a consensus algorithm is a mechanism through which a distributed network of nodes agrees on the state of the ledger. In the absence of a central authority, whose role is instead distributed across every single node in the network, a coordination mechanism needs to be present. Every node has a slightly different view of the network and may verify different sets of transactions at slightly different times (milliseconds apart). It is crucial that the entire network agrees on the same order, number, and validity of transactions. In an environment where globally distributed anonymous nodes constantly join and leave the network, this is a rather difficult task. Pre-Bitcoin solutions were able to deal with this problem only as long as the network consisted of a set of known nodes that knew each other’s identities.

Nakamoto came up with a clever solution in Bitcoin when he decided to use the Proof-of-Work mechanism as a tool to achieve this consensus. In each round, which takes 10 minutes on average, the network effectively chooses one random node from all those that mine (and validate) transactions, with the odds weighted by computing power. Miners compete against each other to solve a cryptographic puzzle, and whoever finds the solution first and proves it to the network wins the virtual lottery and the right to generate the new block. Not only does the winner receive a reward in the form of freshly minted bitcoins and the transaction fees in the block, but his “version of truth” is accepted by all nodes, and his block is added to the blockchain.
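The puzzle can be illustrated with a toy miner. This is a simplified sketch: it hashes an arbitrary header plus an 8-byte nonce once with SHA-256 and requires a given number of leading zero bits, whereas Bitcoin double-hashes an 80-byte header and compares the result against a full 256-bit target.

```python
import hashlib


def _digest(header: bytes, nonce: int) -> bytes:
    return hashlib.sha256(header + nonce.to_bytes(8, "little")).digest()


def mine(header: bytes, difficulty_bits: int, max_nonce: int = 2**32) -> tuple[int, str]:
    """Search for a nonce whose hash has at least `difficulty_bits` leading zero bits."""
    target = 1 << (256 - difficulty_bits)
    for nonce in range(max_nonce):
        digest = _digest(header, nonce)
        if int.from_bytes(digest, "big") < target:
            return nonce, digest.hex()
    raise RuntimeError("no valid nonce found in range")


def verify(header: bytes, nonce: int, difficulty_bits: int) -> bool:
    """Anyone can check a claimed solution with a single hash."""
    return int.from_bytes(_digest(header, nonce), "big") < (1 << (256 - difficulty_bits))
```

The asymmetry is the whole point: `mine` needs on average 2^12 = 4,096 attempts at 12 bits of difficulty, while `verify` needs exactly one hash, so the network can cheaply confirm that work was done.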

While Nakamoto consensus assigns the right to generate blocks based on a mathematical proof of computational work, alternative consensus mechanisms may do so based on different criteria. The invention of Bitcoin gave a significant push to this space, and various types of algorithms, many backed by serious academic research, have since grown rather popular. Currently, it is almost impossible to map the entire spectrum of consensus algorithms out there. The family of Proof-of-Work algorithms alone has grown significantly in the past years, and covering them all would be far beyond the scope of this book, let alone this chapter. The same goes for the ever-growing body of research on Proof-of-Stake algorithms. As various aspects of Bitcoin’s PoW are covered in the previous chapters, the following paragraphs aim to map the evolution of the most prominent consensus protocols and break down the conceptual differences between them, while leaving pointers for curious readers to explore further.

Before we discuss consensus protocols further, it is important to get familiar with the notion of transaction finality. When you swipe a credit card to pay for, say, a turmeric latte, the vendor is immediately willing to commence the magic ritual of blending almond milk with golden turmeric powder, full of curcumin, that results in the delicious potion you just ordered. The vendor feels comfortable doing so within seconds because they consider the transaction to be final. Cash provides instant finality, while blockchains may not. As we know from Nakamoto consensus in Bitcoin, there is a chance that a transaction can be reverted if the network is, for instance, under a 51% attack. This is especially true if the transaction has zero confirmations. With each new confirmation, in other words each new block, the probability of transaction reversal becomes significantly smaller. We say that systems with such properties have probabilistic finality. This does not necessarily need to be a problem, as it simply means that the network is a bit slower in terms of transaction confirmations. But in the case of blockchains, such properties may deter certain applications from being built on top of the network. Therefore, some platforms improve on this property by designing their systems to provide absolute finality. These are usually based on pBFT protocols, which we will discuss later in the following paragraphs. Tendermint, for example, is recognized as a consensus protocol with absolute finality, as its transactions are considered final as soon as they are included in a block and added to the blockchain. Some systems that use Proof-of-Stake with a slashing mechanism (including Casper FFG) are considered to provide economic finality, because attempting a blockchain reorganization is extremely costly.
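How quickly probabilistic finality sets in was quantified in Section 11 of the Bitcoin white paper. The following is a Python port of that calculation: `q` is the attacker’s share of the hash rate and `z` is the number of confirmations the transaction already has. The early return for `q >= 0.5` is our addition, since the white paper’s formula assumes an honest majority.

```python
import math


def attacker_success_probability(q: float, z: int) -> float:
    """Probability that an attacker with hash-rate share q ever catches up from z blocks behind."""
    p = 1.0 - q
    if q >= p:
        return 1.0  # a majority attacker eventually wins with certainty
    lam = z * (q / p)  # expected attacker progress while z honest blocks are found
    total = 1.0
    for k in range(z + 1):
        poisson = math.exp(-lam)
        for i in range(1, k + 1):
            poisson *= lam / i
        total -= poisson * (1.0 - (q / p) ** (z - k))
    return total
```

For an attacker with 10% of the hash rate, one confirmation leaves about a 20% chance of reversal, while five confirmations bring it below 0.1%, which is why waiting for several blocks became common practice.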

Proof-of-Work

Consensus mechanisms are ultimately about the application of various game-theoretical and threat models. Over the past decade, Proof-of-Work has proven to have the right incentives in place for a network to operate in the wilderness of the open Internet. The beauty of the protocol is that even if an adversary amasses more than 50% of a network’s hash rate, it is usually in his economic self-interest to keep following the protocol rules, as doing so is more profitable, unless there is a huge double-spend transaction to be made. Any attempted attack would be very costly, especially against a hash rate such as Bitcoin’s. It is assumed that only a very large agent, such as a nation state with a few billion dollars to spare, could successfully attack a PoW network of that scale.

Within the realm of PoW-based networks, there has been an ongoing debate about the pros and cons of ASIC resistance. ASICs are specialized hardware designed for a single purpose: mining a specific cryptocurrency’s algorithm far more efficiently than general-purpose hardware. Some cryptocurrencies have long tried to prevent ASICs for their particular algorithm from existing. Monero, for instance, did so with hard forks that happened almost every six months, regularly tweaking its algorithm. The reasoning goes like this: if a coin is mined with widely available hardware, its hash rate will be more decentralized, which ultimately makes the network more secure. This might be a double-edged sword, though. Universal mining hardware can easily be switched to another cryptocurrency that uses the same algorithm, which may result in hash rate volatility. Such behavior is actually quite common among GPU miners. Meanwhile, the existence of ASICs implies increased hash rate centralization on the one hand, but also more “loyal” miners on the other, and thus more stable security assumptions for the network. The proliferation of hash rate marketplaces has been an important and growing trend since late 2017, as it significantly lowered the barrier to entry for mining, especially for coins mined with graphics cards. It allows for more efficient resource allocation, and thus makes the entire mining market more competitive. At the same time, it makes renting hash rate easy and accessible, which may lead to more attacks on PoW networks in the future.

Owners of ASICs that can be used exclusively to mine a particular coin are less incentivized to participate in hash rate marketplaces. This is because they care more about whether their hardware could be used to attack their coin and thus erase their sole source of income, whereas owners of universal hardware don’t need to worry as much, since their hardware can be used for a plethora of other coins. We can expect that, sooner or later, more and more ASICs will become available for the biggest coins, which may result in GPU hash rate being offered at lower cost for whatever purpose the renters see fit. In 2019, we saw the emergence of ASICs for Zcash and Ethereum, some of the largest consumers of GPU hash rate. In reaction to this, the Ethereum Foundation and developer community agreed in principle to introduce Programmatic Proof-of-Work, also known as “ProgPoW,” as a countermeasure. At approximately the same time, in January of 2019, we witnessed a significant spike in Monero’s hash rate, indicating the presence of ASICs once again. Monero’s early 2018 fork to get rid of ASICs had been followed by a dramatic 80% drop in hash rate. The network was weakened and immediately successfully attacked. The reappearance of ASICs in 2019 ignited discussion within the community as to whether the Monero network should fork as often as every quarter. Monero’s lead developer, Riccardo Spagni, commented:

All that’s happening is Monero is stalling ASICs, not preventing them. Hopefully it’s stalled until ASICs are commoditized!

Either way, ASICs seem to be inevitable for PoW-based cryptocurrencies once they reach a certain market capitalization. However, this shouldn’t necessarily be viewed as a bad thing. Where it is found undesirable, innovative modifications to PoW that effectively create hybrids may offer interesting alternatives. Decred, for instance, introduced a rather innovative hybrid system combining PoW and PoS, sometimes also referred to as Proof-of-Activity. While blocks are still mined by regular miners, shortly afterwards stakeholders vote on their validity. Stakeholders do so by buying voting tickets, which locks up a certain amount of coins in the network. In each round when a block is created, five random tickets are selected from the ticket pool, and if a majority of them agree, the block is validated. Such a system arguably increases the overall security of the network and might be a possible solution for purely PoW-based networks with smaller hash rates. Another recent innovative protocol, Thunderella, introduced by two Cornell professors, Elaine Shi and Rafael Pass, combines a PoW-based Nakamoto-style chain with a PoS system on top of it.
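The ticket vote described above can be sketched as follows. The selection here is a stand-in, not Decred’s actual lottery: we seed Python’s pseudo-random generator with a hash of the block so that every node derives the same five tickets, and the ticket names are hypothetical.

```python
import hashlib
import random

TICKETS_PER_BLOCK = 5


def select_voters(ticket_pool: list[str], block_hash: str) -> list[str]:
    """Pick five distinct tickets deterministically from the block hash,
    so every node derives the same selection (illustrative stand-in only)."""
    seed = int.from_bytes(hashlib.sha256(block_hash.encode()).digest(), "big")
    return random.Random(seed).sample(ticket_pool, TICKETS_PER_BLOCK)


def block_approved(votes: dict[str, bool]) -> bool:
    """Validate the block if a majority of the selected tickets vote yes."""
    yes = sum(votes.values())
    return yes > len(votes) / 2
```

Deriving the selection from the block itself matters: miners cannot pick friendly voters, and stakeholders cannot know in advance which blocks they will judge.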

Another interesting tweak, called Delayed Proof-of-Work (dPoW), was introduced by Komodo in 2016. It allowed one blockchain to take advantage of the security guarantees provided by another chain with a bigger hash rate. Data was transmitted between the two chains by a set of 64 notary nodes elected by the stakeholders. Such a system is partially trusted and largely resembles Delegated Proof-of-Stake, which we’ll discuss later in this chapter. This idea, however, was later developed further, in an allegedly decentralized, trustless, and permissionless manner, with Veriblock’s introduction of Proof-of-Proof (PoP). Their white paper was released in early 2018, and the main net launched in March 2019. Veriblock essentially allowed other blockchains to “parasitize” Bitcoin’s security guarantees by publishing data directly into the Bitcoin blockchain using the OP_RETURN opcode, which can be used to store arbitrary data. Meanwhile, Veriblock incentivized miners of the parasitizing altcoins to publish data into Bitcoin, as well as to publish into Veriblock’s blockchain the proof that such data had been published and confirmed in Bitcoin. Ultimately, an altcoin’s ledger state would be snapshotted in Bitcoin. Veriblock started to make waves even before its main net launch. In February 2019, while still on the test net, Veriblock’s transactions consumed significant space within Bitcoin blocks. It was estimated that 20-30% of all daily Bitcoin transactions originated from Veriblock. Some Bitcoiners watched with great dismay as the magnum opus of the crypto world was spammed by altcoins. However, it did help that former Bitcoin contributor Jeff Garzik was part of Veriblock’s team, along with one of Ethereum’s co-founders, Anthony Di Iorio.
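Publishing data “into Bitcoin” means placing it in an unspendable OP_RETURN output. The sketch below builds such an output script for a commitment to a hypothetical altcoin block header. It assumes the 80-byte data cap long used by Bitcoin Core’s default relay policy, and it does not reproduce Veriblock’s actual payload format.

```python
import hashlib

OP_RETURN = 0x6A
OP_PUSHDATA1 = 0x4C
MAX_OP_RETURN_DATA = 80  # bytes; assumed default relay-policy limit


def op_return_script(data: bytes) -> bytes:
    """Build an output script that carries `data` and can never be spent."""
    if len(data) > MAX_OP_RETURN_DATA:
        raise ValueError("data exceeds the standard OP_RETURN size")
    if len(data) <= 75:
        push = bytes([len(data)])                 # direct push: the opcode is the length
    else:
        push = bytes([OP_PUSHDATA1, len(data)])   # longer pushes need OP_PUSHDATA1
    return bytes([OP_RETURN]) + push + data


# Hypothetical: commit to an altcoin block header by publishing its hash in Bitcoin.
altcoin_header = b"hypothetical altcoin block header"
script = op_return_script(hashlib.sha256(altcoin_header).digest())
```

A 32-byte hash yields a 34-byte script. Each commitment is small, but when thousands of them are paid for every day, they add up to the blockspace share described above.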

Proof-of-Work, mainly in Bitcoin, has been widely misunderstood and criticized in mainstream media and by proponents of “green” solutions for being too wasteful of natural resources. However, it is very likely still the best way to secure a truly decentralized blockchain network, and this may not change in the near future. We must remember that making the creation of blocks costly was a design feature. It is my conviction that there is room on this planet for at least one robust PoW system that provides strong security guarantees for the blossoming of a new, free, and censorship-resistant parallel financial world. Furthermore, not only does a sizable share of the electricity powering the system come from renewable sources, but its very existence creates strong incentives to explore and increasingly use such energy. This proposition is certainly worth the energy burned. After all, humankind allocates significant resources to many less useful lunacies. When seeking where to save electricity, Bitcoin sits far below Christmas lights, the Kardashians, and similar distractions on the list.

Proof-of-Stake

As we mentioned at the beginning of the chapter, PoS was first implemented in Peercoin. Since then, it has been used in many other cryptocurrencies; early platforms using it included NXT and BitShares. Proof-of-Stake was designed as a “green” alternative to PoW, since it did not involve solving expensive computations. Instead, your likelihood of minting (PoS jargon for mining) new blocks was proportional to your stake in the network, measured by the amount of coins you own. In simple terms, if you owned 10% of all coins in circulation, you would mint roughly every 10th block. Since its inception, PoS has often been criticized for providing weaker security guarantees than PoW. This was mainly because of what became known as the “nothing-at-stake problem”: block generators have nothing to lose should they decide to sign two different blocks at the same time, thus working on two different ledgers and effectively forking the network. This was easy to do, since working on a block cost virtually nothing. Several proposals have been made to address this vulnerability.
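Stake-proportional selection can be sketched with a weighted random draw. This ignores coin age and, importantly, where the randomness comes from; real PoS systems derive it in ways that resist manipulation, which this sketch does not attempt. The holders and amounts are hypothetical.

```python
import random
from collections import Counter


def pick_minter(stakes: dict[str, float], rng: random.Random) -> str:
    """Choose the next block's minter with probability proportional to stake."""
    holders = list(stakes)
    return rng.choices(holders, weights=[stakes[h] for h in holders], k=1)[0]


stakes = {"alice": 10.0, "bob": 30.0, "carol": 60.0}  # hypothetical holdings
rng = random.Random(42)
wins = Counter(pick_minter(stakes, rng) for _ in range(10_000))
```

Over 10,000 rounds, alice (10% of the stake) mints roughly 1,000 blocks, matching the “every 10th block” intuition above.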

One of them was checkpointing, a protective measure against blockchain reorganization. Checkpoints, or snapshots of the ledger, were hardcoded into the standard client, so all transactions that occurred before a checkpoint could be considered irreversible. There was an obvious problem with this kind of solution: it introduced a trusted party, namely the developers who set the checkpoints. This was hardly acceptable for many, even though some teams were willing to operate with this model. Interestingly enough, blockchain checkpointing predates PoS systems, as the following post on Bitcointalk shows:

…Added a simple security safeguard that locks-in the block chain up to this point… The security safeguard makes it so even if someone does have more than 50% of the network's CPU power, they can't try to go back and redo the block chain before yesterday. (if you have this update) I'll probably put a checkpoint in each version from now on. Once the software has settled what the widely accepted block chain is, there's no point in leaving open the unwanted non-zero possibility of revision months later.

The post is from July 2010, and its author is none other than Satoshi Nakamoto himself. He too deemed this feature helpful in the early days of the network’s bootstrapping. Checkpoints remained part of the main Bitcoin client releases until 2014, when Bitcoin Core stopped adding new checkpoints.
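In code, a checkpoint is little more than a table of heights and expected block hashes that the client refuses to contradict. The sketch below uses tiny placeholder heights and hashes (hypothetical, not real Bitcoin checkpoints) and represents a chain as a list of block hashes indexed by height.

```python
# Hypothetical checkpoint table: block height -> expected block hash.
CHECKPOINTS = {
    2: "hash-c",
    4: "hash-e",
}


def violates_checkpoint(chain: list[str]) -> bool:
    """True if a candidate chain disagrees with any checkpoint it is long enough to reach."""
    return any(
        height < len(chain) and chain[height] != expected
        for height, expected in CHECKPOINTS.items()
    )
```

A node applying this rule simply ignores any competing chain that rewrites history before a checkpoint, no matter how much work it carries, which is precisely where the trust in the developers who chose the table comes in.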

Another technique for mitigating the nothing-at-stake problem in early PoS was age-based selection. In short, coins became eligible to compete in minting new blocks only after they had been held at an address for a certain period of time, say 30 days. To prevent very “old” coins from dominating consensus, a cap was set on the age at which coins reached their highest probability of finding the next block. In Peercoin, it was 90 days: an 80-day-old coin carried more weight than younger ones, but all coins older than 90 days had the same weight. Later on, more sophisticated and arguably more aggressive measures were incorporated, such as punishing bad validators by slashing their stakes. This was integrated into Tendermint and planned for Ethereum too. Interesting upgrades to PoS were also made by the team behind Dfinity.
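Age-based selection can be expressed as a weight function. This sketch follows the description above (no weight before 30 days, growing with age, flat after 90 days); Peercoin’s actual coin-age formula differs in its details, so treat it as a simplified illustration.

```python
DAY = 24 * 60 * 60      # seconds
MIN_AGE_DAYS = 30       # coins younger than this cannot stake
MAX_AGE_DAYS = 90       # age beyond this adds no extra weight


def stake_weight(amount: float, age_seconds: int) -> float:
    """Simplified coin-age weight: zero before 30 days, proportional to age, capped at 90 days."""
    age_days = age_seconds / DAY
    if age_days < MIN_AGE_DAYS:
        return 0.0
    return amount * min(age_days, MAX_AGE_DAYS)
```

The cap is what stops a holder from sitting on coins for years and then dominating block production in one burst.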

Delegated Proof-of-Stake

Delegated Proof-of-Stake (DPoS) built on the previous ideas and modified the protocol so that stakeholders vote for “leaders”: a smaller quorum of nodes in charge of producing blocks. Leader elections occur periodically, in a model resembling representative democracy. DPoS was created by Daniel Larimer, who used it in one of his first projects, BitShares, in 2013. His subsequent blockchain endeavours, Steemit and EOS, implemented it as well. From the Blockchain Trilemma perspective, the system is optimized for scalability and high transaction throughput at the cost of being relatively more centralized. Over the years it has been used in a number of cryptocurrencies, perhaps the most prominent being Lisk.
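A toy DPoS round has two steps: a stake-weighted election, then a production schedule among the winners. In this sketch, each voter backs any number of candidates with their full stake and elected delegates take turns in round-robin order; real systems differ in how votes are weighted and how schedules are shuffled, and all names here are hypothetical.

```python
from collections import defaultdict


def elect_delegates(votes: dict[str, tuple[float, list[str]]], seats: int) -> list[str]:
    """Stake-weighted approval voting.

    `votes` maps voter -> (stake, approved candidates). Returns the top `seats`
    candidates by total backing stake, ties broken alphabetically.
    """
    tally: dict[str, float] = defaultdict(float)
    for stake, candidates in votes.values():
        for candidate in set(candidates):
            tally[candidate] += stake
    ranked = sorted(tally, key=lambda c: (-tally[c], c))
    return ranked[:seats]


def producer_schedule(delegates: list[str], slots: int) -> list[str]:
    """Elected delegates take turns producing blocks in round-robin order."""
    return [delegates[i % len(delegates)] for i in range(slots)]
```

Because only a handful of known producers take turns, blocks can come quickly and predictably, which is where the throughput gain, and the centralization trade-off, comes from.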

Pure Proof-of-Stake

Pure Proof-of-Stake (PPoS) is a protocol built on the Byzantine consensus proposed by Silvio Micali and used in Algorand’s permissionless blockchain. It deploys Verifiable Random Functions (VRFs) to secretly select block proposers and committee members. It claims to offer fast recovery from network partitions and instant finality, although some reviews suggest it achieves only probabilistic finality.

Proof-of-Importance

Proof-of-Importance was implemented by the NEM platform in 2015. It improved on simple PoS by weighing multiple factors when evaluating a node’s contribution, for instance the number of incoming and outgoing transactions made by the node, as well as the importance of the nodes it transacted with. Probably the closest analogy would be Google’s PageRank algorithm. To be eligible for the importance calculation, a node needed to hold at least 10,000 XEM.
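To make the PageRank analogy concrete, here is classic PageRank by power iteration over a small transaction graph, where an edge A to B means “A sent a transaction to B”. This only illustrates the analogy; NEM’s actual importance score uses a different ranking algorithm combined with vested balance, and the accounts are hypothetical.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iters: int = 50) -> dict[str, float]:
    """Classic PageRank; accounts with no outgoing edges spread their score evenly."""
    nodes = set(links) | {t for targets in links.values() for t in targets}
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iters):
        new = {node: (1.0 - damping) / n for node in nodes}
        for node in nodes:
            targets = links.get(node, [])
            if targets:
                share = damping * rank[node] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                for t in nodes:
                    new[t] += damping * rank[node] / n
        rank = new
    return rank


# Hypothetical graph: three accounts pay a merchant "hub", which pays "a".
scores = pagerank({"a": ["hub"], "b": ["hub"], "c": ["hub"], "hub": ["a"]})
```

The account that many others transact with ends up most “important”, and a transaction from an important account counts for more than one from an obscure account.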

Byzantine Fault Tolerance protocols

Practical Byzantine Fault Tolerance (pBFT) was one of the first practical algorithms designed to tackle the Byzantine Generals’ Problem. It was introduced at MIT in 1999 by Barbara Liskov and Miguel Castro. It allows for high performance, although it is best suited to consortium-based networks of known nodes. It is often modified with various tweaks, as in Hyperledger and Tendermint, or even combined with other consensus algorithms, such as PoW in Zilliqa. Federated Byzantine Agreement (FBA) is a similar protocol introduced to address some of pBFT’s scaling limitations. It is better suited to decentralized networks and has been implemented in Ripple and Stellar. Delegated Byzantine Fault Tolerance (dBFT) is a modification of pBFT developed and implemented by NEO. The protocol is in many ways similar to DPoS.
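The reason pBFT suits known, bounded validator sets is its quorum arithmetic: tolerating `f` Byzantine replicas requires `n >= 3f + 1` replicas in total, and each agreement step waits for `2f + 1` matching messages. A small helper makes the numbers concrete.

```python
def bft_parameters(n: int) -> tuple[int, int]:
    """For n replicas, return (max Byzantine faults tolerated, quorum size).

    With n >= 3f + 1 and quorums of 2f + 1, any two quorums overlap in at
    least f + 1 replicas, so at least one honest replica is in both.
    """
    if n < 1:
        raise ValueError("need at least one replica")
    f = (n - 1) // 3
    return f, 2 * f + 1
```

Four replicas tolerate one faulty node, and one hundred tolerate thirty-three. Every replica must hear from a quorum in every round, so message traffic grows roughly quadratically with `n`, which is why plain pBFT struggles in large open networks.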

Ouroboros

Ouroboros was introduced by Cardano as the first proof-of-stake protocol based on formal, peer-reviewed academic research. Cardano was founded by Charles Hoskinson, who is also one of the co-founders of Ethereum. The math behind it is rather advanced, and Cardano researchers believe Ouroboros represents a technological breakthrough in the field of PoS systems, mainly because of the protocol’s provable security properties. This was challenged by many, including Larimer, who questioned the assumptions underlying the claim and, in his peer review, compared it to a slower and less secure version of DPoS:

In other words, Ouroboros is a 400 pound bullet proof vest that doesn’t actually stop the real bullets.

This critique should be taken with a grain of salt, though, because from a business perspective Larimer may have had every incentive to disparage a competitor’s security model and assumptions. Ouroboros was also criticized by Buterin for offering only probabilistic transaction irreversibility, which in his view made it unfit for smart contracts.

Avalanche

Avalanche is another protocol that uses a modified version of Proof-of-Stake along with a Directed Acyclic Graph (DAG), and that claims (as many others do) to be the next-generation consensus protocol, promising extremely fast transaction finality, low latency, high scalability, and even lightweight clients while operating in a robust and decentralized network. However, unlike many other protocols, Avalanche has been recognized and highly esteemed by many high-profile experts in the space. It was proposed in 2018 by a pseudonymous team of authors known as “Team Rocket”, and it has been advocated for by one of the leading experts in distributed systems, Emin Gün Sirer of Cornell University.
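At the heart of the Avalanche family is repeated random subsampling. The sketch below simulates Snowball, one of the building blocks described in the Avalanche paper, among honest nodes only. Each undecided node samples `k` peers; if at least `alpha` share a color, that color gains confidence, and a node decides after `beta` consecutive successful samples of the same color. The parameters and the 80/20 starting split are hypothetical, and the full protocol’s DAG and stake-weighted sampling are omitted.

```python
import random

RED, BLUE = "red", "blue"


def snowball(n: int = 100, k: int = 10, alpha: int = 7, beta: int = 15,
             initial_red: float = 0.8, seed: int = 1,
             max_rounds: int = 1_000) -> tuple[list[str], list[bool]]:
    """Simulate Snowball; returns each node's final preference and decision flag."""
    rng = random.Random(seed)
    pref = [RED if i < int(n * initial_red) else BLUE for i in range(n)]
    confidence = [{RED: 0, BLUE: 0} for _ in range(n)]
    last = [None] * n      # color of the node's previous successful sample
    streak = [0] * n       # consecutive successful samples of that color
    decided = [False] * n
    for _ in range(max_rounds):
        if all(decided):
            break
        for i in range(n):
            if decided[i]:
                continue
            sample = rng.sample([j for j in range(n) if j != i], k)
            reds = sum(pref[j] == RED for j in sample)
            winner = RED if reds >= alpha else BLUE if k - reds >= alpha else None
            if winner is None:
                streak[i] = 0  # no alpha-majority: the streak breaks
                continue
            confidence[i][winner] += 1
            if confidence[i][winner] > confidence[i][pref[i]]:
                pref[i] = winner
            streak[i] = streak[i] + 1 if winner == last[i] else 1
            last[i] = winner
            if streak[i] >= beta:
                decided[i] = True
    return pref, decided
```

No node ever hears from the whole network, yet a small initial majority snowballs into unanimous agreement after a few dozen rounds of cheap sampling, which is what gives the family its low latency.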

Alternative data structures

Multiple research teams have moved away from the single-chain paradigm, designing alternative ways to structure data within a distributed system. The Directed Acyclic Graph (DAG) is used in a plethora of cryptocurrency projects. Its non-linear structure, a major shift from the linear structure of conventional blockchains, makes it highly scalable. Some literature uses the term DAG for a particular data structure only. Other authors use it for that data structure combined with additional features that together form a standalone consensus protocol. DAG-based protocols may therefore involve actual PoW mining, or may not. For instance, Ethereum’s PoW mining algorithm, Ethash, uses a large dataset referred to as the DAG. Multiple projects deploy DAGs in different ways. Let’s briefly go through some of them.
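
The structural difference from a blockchain is that a block may reference several parents. Blocks mined at the same time can therefore be merged instead of orphaned. The Python sketch below models a small block DAG as a mapping from each block to its parents. The block IDs are hypothetical. It uses the standard `graphlib` module (Python 3.9+) to find an order that places every block after its parents, and to reject references that would form a cycle. A topological order is only one possible linearization. Protocols such as SPECTRE or GHOSTDAG define their own ordering rules on top of the graph.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical block DAG: each block lists the earlier blocks it references.
# In a linear blockchain every block would have exactly one parent.
dag = {
    "genesis": [],
    "A": ["genesis"],
    "B": ["genesis"],   # mined concurrently with A
    "C": ["A", "B"],    # references both branches instead of orphaning one
    "D": ["C"],
    "E": ["B"],
    "F": ["D", "E"],
}


def linearize(graph: dict[str, list[str]]) -> list[str]:
    """Return one ordering in which every block appears after all of its parents."""
    return list(TopologicalSorter(graph).static_order())


print(linearize(dag))

try:
    linearize({"X": ["Y"], "Y": ["X"]})   # references that loop back are invalid
except CycleError:
    print("rejected: the references form a cycle")
```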

Hashgraph is a patented protocol created by Leemon Baird in 2016 that belongs to the family of gossip protocols. Its design does not make it a natural fit for open permissionless networks, even though this was attempted in a project named Hedera Hashgraph.
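
Gossip protocols spread information by having each node repeatedly pass what it knows to randomly chosen peers. The Python sketch below is a generic push-gossip simulation, not Hashgraph’s own “gossip about gossip” and virtual voting. It counts how many rounds pass before every node has heard a message that one node started. The assumption is that each informed node pushes to one uniformly random peer per round.

```python
import random


def gossip_rounds(n: int, seed: int = 0) -> int:
    """Rounds of push gossip until all n nodes have heard a message started by node 0."""
    rng = random.Random(seed)
    informed = {0}
    rounds = 0
    while len(informed) < n:
        # Snapshot: nodes informed during this round start pushing next round.
        for node in list(informed):
            peer = rng.randrange(n - 1)
            if peer >= node:
                peer += 1   # shift past the sender so a node never picks itself
            informed.add(peer)
        rounds += 1
    return rounds


print(gossip_rounds(1000))
```

The number of informed nodes roughly doubles each round at first, so the round count grows about logarithmically with network size. This makes gossip an efficient way to disseminate transactions.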

IOTA is, by market capitalization at the time of writing, probably the largest cryptocurrency using a DAG-based consensus algorithm, which it calls the Tangle. Besides a few technical hiccups in the past, IOTA has been criticized for its centralized design. In that design, a Coordinator node run by the IOTA Foundation confirms transactions, a setup the team describes as temporary.
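
In the Tangle, each new transaction approves earlier transactions that nobody has approved yet, known as tips. This replaces the chain of blocks with a web of approvals. The Python sketch below grows a toy Tangle in which every new transaction approves up to two tips. The Tangle whitepaper discusses several tip-selection strategies, including uniform random selection and a weighted random walk. This sketch uses uniform random selection. The fixed number of arrivals per time step is a hypothetical, crude model of network delay.

```python
import random


def grow_tangle(steps: int, arrivals_per_step: int = 3, seed: int = 0):
    """Toy Tangle: every new transaction approves up to two current tips."""
    rng = random.Random(seed)
    approves = {0: []}   # transaction id -> ids it approves; 0 is the genesis
    tips = {0}           # transactions not yet approved by anyone
    next_id = 1
    for _ in range(steps):
        # Transactions arriving in the same step all see the same tip set, which
        # models network delay and lets the tip set grow beyond a single entry.
        visible = sorted(tips)
        new = []
        for _ in range(arrivals_per_step):
            # Uniform random tip selection.
            approves[next_id] = rng.sample(visible, min(2, len(visible)))
            new.append(next_id)
            next_id += 1
        for tx in new:
            tips.difference_update(approves[tx])
        tips.update(new)
    return approves, tips


approves, tips = grow_tangle(50)
print(len(approves), sorted(tips))
```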

MaidSafe is one of the oldest cryptocurrency projects, if not the oldest. David Irvine founded it in 2006, although its network has not yet launched. In 2018, the project released PARSEC (Protocol for Asynchronous, Reliable, Secure, and Efficient Consensus), a highly asynchronous DAG-based consensus protocol that it presents as revolutionary.

Nano (formerly RaiBlocks) uses a data structure called the block-lattice. In it, each account has its own chain that only the account’s owner can write to, while every node holds a copy of all the chains. This is complemented by a DPoS-like consensus mechanism.
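
The idea behind the block-lattice is that a transfer touches two account chains. The sender appends a send block to its own chain, and the recipient later appends a matching receive block to theirs. The Python sketch below is a toy model of that split. The class and method names are hypothetical. Comparing account names stands in for the signature check a real node performs, and fees, voting, and hashing are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    kind: str       # "genesis", "send" or "receive"
    amount: int
    balance: int    # account balance after this block
    link: str = ""  # counterparty account


class BlockLattice:
    """Toy block-lattice: one chain per account, appendable only by its owner."""

    def __init__(self):
        self.chains: dict[str, list[Block]] = {}
        self.pending: list[tuple[str, str, int]] = []   # (sender, recipient, amount)

    def _append(self, signer: str, account: str, block: Block) -> None:
        # A real node verifies the owner's signature; comparing names is a stand-in.
        if signer != account:
            raise PermissionError(f"{signer} cannot write to {account}'s chain")
        self.chains.setdefault(account, []).append(block)

    def balance(self, account: str) -> int:
        chain = self.chains.get(account)
        return chain[-1].balance if chain else 0

    def genesis(self, account: str, supply: int) -> None:
        self._append(account, account, Block("genesis", supply, supply))

    def send(self, signer: str, sender: str, recipient: str, amount: int) -> None:
        new_balance = self.balance(sender) - amount
        if new_balance < 0:
            raise ValueError("insufficient balance")
        self._append(signer, sender, Block("send", amount, new_balance, recipient))
        self.pending.append((sender, recipient, amount))

    def receive(self, signer: str, recipient: str) -> None:
        # Pocket every pending transfer addressed to this account.
        for entry in [p for p in self.pending if p[1] == recipient]:
            sender, _, amount = entry
            new_balance = self.balance(recipient) + amount
            self._append(signer, recipient, Block("receive", amount, new_balance, sender))
            self.pending.remove(entry)


lattice = BlockLattice()
lattice.genesis("alice", 100)
lattice.send("alice", "alice", "bob", 30)   # only alice's chain changes
lattice.receive("bob", "bob")               # bob's chain records the incoming funds
print(lattice.balance("alice"), lattice.balance("bob"))
```

Because each account writes only to its own chain, transfers between unrelated accounts never compete for the same position in a shared ledger.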

SPECTRE stands for Serialization of Proof-of-work Events: Confirming Transactions via Recursive Elections. It was proposed in 2017 by a team of researchers at the Hebrew University of Jerusalem led by Aviv Zohar, as a scaling solution for Bitcoin and other cryptocurrencies. It combines DAG and PoW, because it is designed to allow multiple valid blocks to be mined concurrently. More recently, Zohar and his colleague Yonatan Sompolinsky proposed two new cutting-edge protocols, PHANTOM and GHOSTDAG, that may extend SPECTRE.

DAG-based technologies have gained traction in the past few years and are often touted as blockchain killers. Meanwhile, some projects have moved even beyond DAGs, bringing brand new design principles to distributed networks. Holochain is one of them. Proposed in 2017, it combines ideas from BitTorrent and Git. It uses Distributed Hash Tables (DHTs) and takes an agent-centric approach, in which each agent maintains its own history instead of sharing a single ledger with all peers. Holochain is therefore not a blockchain, since it enforces consensus on validation rules rather than on the data itself. Its creators named this consensus Proof-of-Service. Some of its design features make it comparable to Hashgraph.
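
A distributed hash table decides which nodes store a piece of data by comparing the data’s hash with the nodes’ identifiers. No global ledger is needed. The Python sketch below uses the Kademlia-style XOR distance that many DHTs rely on. This is an assumption made for illustration, since Holochain’s own DHT design differs in its details. The agent names and the replica count are hypothetical.

```python
import hashlib


def key_id(data: bytes) -> int:
    """Map data (or a node's name) to a 256-bit identifier."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def responsible_nodes(entry: bytes, node_ids: list[int], replicas: int = 3) -> list[int]:
    """Nodes whose IDs are closest to the entry's hash under XOR distance."""
    target = key_id(entry)
    return sorted(node_ids, key=lambda nid: nid ^ target)[:replicas]


nodes = [key_id(f"agent-{i}".encode()) for i in range(20)]   # hypothetical agents
holders = responsible_nodes(b"some public entry", nodes)
print([hex(h)[:10] for h in holders])
```

Any agent can recompute this placement locally, so it knows which peers to ask for an entry or to hand an entry to for validation and storage.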

Radix emerged in late 2017 as one of the newest alternatives to blockchains and DAGs, introducing a new data structure called Tempo. It uses database sharding and promises great scalability. However, it has not yet been battle-tested and remains to be proven in a production environment.

Other application-specific consensus protocols

In recent years there has been a dizzying number of developments in the field of consensus protocols. Many researchers and developers have experimented with new models tailored to specific business challenges. These are just a few of them:

Proof-of-Authority (PoA) is a consensus mechanism that can be deployed on public blockchains. It is more often used in permissioned ones, because it relies on a typically small set of validators with verified identities. It is used, for instance, in VeChain and in Kovan, one of Ethereum’s testnets.
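
Kovan ran Parity’s Authority Round (Aura) engine. In Aura, time is divided into fixed-length steps, and the validator at position step mod n of an ordered validator list may seal the block for that step. The Python sketch below shows that simplified rotation rule. The validator names and the step length are hypothetical, and the signature check is elided.

```python
# Hypothetical ordered list of authorized validators and step length.
AUTHORITIES = ["validator-1", "validator-2", "validator-3"]
STEP_SECONDS = 5


def expected_proposer(unix_time: int, authorities=AUTHORITIES,
                      step_seconds: int = STEP_SECONDS) -> str:
    """Validator allowed to seal a block during the step containing unix_time."""
    step = unix_time // step_seconds
    return authorities[step % len(authorities)]


def accept_block(unix_time: int, signer: str) -> bool:
    """Accept only blocks sealed by the authority whose turn it is (signature check elided)."""
    return signer == expected_proposer(unix_time)


for t in (0, 5, 10, 15):
    print(t, expected_proposer(t))
```

Block production is cheap because no puzzle has to be solved. Security instead rests on the known identities of the few validators, which is why PoA fits permissioned settings and test networks.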

Proof-of-Elapsed-Time (PoET) is another alternative for permissioned platforms, as it requires specialized hardware. In plain words, each node is assigned a randomly chosen period of time that it must wait. The first node whose wait expires earns the right to produce the next block. Intel invented it in 2016, and it is used in Sawtooth, one of the Hyperledger platforms.
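
The election described above amounts to a lottery in which the shortest random wait wins. The Python sketch below simulates one round of it. Sawtooth generates and attests these timers inside Intel SGX. Here an ordinary pseudo-random generator stands in for that trusted hardware, so nothing in the sketch prevents cheating. Drawing the waits from an exponential distribution is an assumption of the sketch. Any distribution that is identical and independent across nodes gives each node the same chance of winning.

```python
import random


def poet_election(nodes, seed=None):
    """Toy PoET round: every node draws a random wait time; the shortest wait wins."""
    rng = random.Random(seed)
    # In Sawtooth these timers come from trusted hardware; a plain PRNG is used here.
    waits = {node: rng.expovariate(1.0) for node in nodes}
    winner = min(waits, key=waits.get)
    return winner, waits


winner, waits = poet_election(["node-a", "node-b", "node-c"], seed=1)
print(winner, round(waits[winner], 3))
```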

Proof-of-Location (PoL) was designed for public permissionless blockchains. It allows nodes to record authenticated location data without GPS. It is used in the FOAM protocol, which addresses location verification through an open network of terrestrial radios as an alternative location system.

Proof-of-Storage refers to a consensus protocol in which nodes must prove to the network that they actually store the data they were paid to store. It is implemented in Filecoin, which builds on IPFS in pursuit of its bold vision of replacing HTTP as an Internet protocol.
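
A common building block for storage proofs is a challenge and response over a Merkle tree. The client keeps only the tree’s root. Later it challenges the provider to return a randomly chosen chunk together with the hashes needed to rebuild the root. The Python sketch below shows that basic idea, as an illustration only. Filecoin’s production proofs (Proof-of-Replication and Proof-of-Spacetime) are considerably more involved. The file contents and chunking here are hypothetical, and the tree duplicates the last node on odd-sized levels.

```python
import hashlib
import random


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_levels(chunks: list[bytes]) -> list[list[bytes]]:
    """All tree levels from leaf hashes up to the root."""
    level = [h(c) for c in chunks]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]   # duplicate the last node on odd levels
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(chunks: list[bytes], index: int) -> tuple[bytes, list[bytes]]:
    """Storage provider: return the challenged chunk and its authentication path."""
    path, i = [], index
    for level in merkle_levels(chunks)[:-1]:
        sibling = i ^ 1
        path.append(level[sibling] if sibling < len(level) else level[i])
        i //= 2
    return chunks[index], path


def verify(root: bytes, index: int, chunk: bytes, path: list[bytes]) -> bool:
    """Client: rebuild the root from the chunk and path, and compare."""
    node = h(chunk)
    for sibling in path:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root


data = [f"chunk-{i}".encode() for i in range(5)]   # hypothetical stored file
root = merkle_levels(data)[-1][0]                  # the client keeps only this root
challenge = random.randrange(len(data))            # a random chunk is challenged
chunk, path = prove(data, challenge)
print(verify(root, challenge, chunk, path))
```

The provider cannot predict which chunk will be challenged, so it must keep the whole file to pass repeated challenges. Meanwhile, the client stores just one hash.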

Research in this area is quite new and decentralized, so it is virtually impossible to map all developments. There are many other, less well-known protocols, and certainly more are still being developed. To name a few more for the curious: Proof-of-Space, Proof-of-Weight, Raft, Proof-of-Retrievability, Proof-of-Time, Proof-of-History, and others.

Masternodes

Some cryptocurrencies add a twist to their consensus by introducing a second tier of nodes. Dash pioneered this concept, and it later spread to other cryptocurrencies such as Zcoin, Horizen, PIVX, Secret Network (formerly Enigma), and more. The goal is to create an additional layer of nodes, called masternodes, that provide extra services to the network, such as instant and private transactions. Masternodes often have higher hardware requirements. More frequently, they have higher requirements in terms of “stake”, as they must lock a certain amount of coins in their wallet. This concept may be combined with PoW consensus, as in Dash, or with PoS, as in PIVX. In Dash, a masternode must lock up 1,000 coins in order to provide, and be paid for, these extra services, whereas in PIVX it must lock up 10,000.
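
The second tier described above comes down to two rules: a node qualifies only by locking the required collateral, and qualifying nodes take turns collecting rewards for their services. The Python sketch below models both. The 1,000 and 10,000 coin figures come from the text. The class name and the simple round-robin payment order are hypothetical simplifications, not Dash’s or PIVX’s actual payment logic.

```python
from collections import deque

COLLATERAL = {"DASH": 1_000, "PIVX": 10_000}   # lock-up requirements from the text


class MasternodeRegistry:
    """Toy second tier: nodes join by locking collateral and are paid in turn."""

    def __init__(self, coin: str):
        self.required = COLLATERAL[coin]
        self.queue: deque[str] = deque()
        self.locked: dict[str, int] = {}

    def register(self, node: str, locked_coins: int) -> bool:
        if locked_coins < self.required:
            return False                   # not enough stake locked up
        self.locked[node] = locked_coins   # these coins leave circulation while locked
        self.queue.append(node)
        return True

    def next_payee(self) -> str:
        node = self.queue.popleft()        # pay the node at the front of the queue...
        self.queue.append(node)            # ...then move it to the back
        return node

    def locked_supply(self) -> int:
        return sum(self.locked.values())


registry = MasternodeRegistry("DASH")
registry.register("mn-1", 1_000)
registry.register("mn-2", 1_000)
print(registry.next_payee(), registry.next_payee(), registry.locked_supply())
```

`locked_supply` shows the lock-up effect discussed in the next paragraph. Every registered masternode removes its collateral from the coins in circulation.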

Such nodes, much like staking in pure PoS cryptocurrencies, may be an interesting proposition from an investment perspective. They resemble interest-bearing assets that provide passive income. The lock-up mechanism also naturally reduces the supply of coins in circulation, which may drive the price up, provided the coin itself has fundamental value. Masternodes peaked in popularity in 2017. New coins were popping up every day to compete for the attention of eager, fearless, and often clueless new investors flooding the space. Exchanges could not keep up with listing new coins, so these were often traded “OTC” on messaging platforms like Discord, Slack, or Telegram. New coins were created almost instantly by forking the codebase of more popular cryptocurrencies and adding minor tweaks. By 2021, the vast majority of these aspiring cryptocurrencies had died.

Fungibility

Fungibility refers to the interchangeability of coins, much like banknotes. It depends on the inability to trace a coin’s history of previous owners. The transparent nature of blockchains makes this property very difficult to achieve. The lack of fungibility may become one of the biggest attack vectors against Bitcoin and other cryptocurrencies. If governments decide to track and punish cryptocurrency users, such an attack may be very difficult to resist. Increasing privacy has therefore been one of the crypto community’s top priorities since the beginning.

Privacy in cryptocurrencies has multiple dimensions. Most of the focus usually goes to transaction-level privacy. Tracking who spends a coin, where, and when allows forensic data analysis to build a transaction graph that captures the ownership history of each coin. There are, however, techniques that preserve the confidentiality of each aspect of a transaction. Various mixing techniques exist on top of different coins. Cryptographic techniques such as ring signatures, or Schnorr signature aggregation, help obscure the sender. Confidential transactions let users hide the amount transacted, and stealth addresses prevent disclosure of the recipient’s address. There are also measures that prevent disclosure of the sender’s IP address, which is crucial for network-level privacy. We mentioned the Dandelion protocol in this regard in the previous chapter and will discuss Kovri in the next one. Both may be complemented well by the most popular tool for IP masking: Tor.

In addition, especially for smart contracts on blockchains, other technologies play vital roles in preserving the confidentiality of data. The trusted execution environment (TEE) is one of them. It usually refers to an isolated area of a device’s processor that ensures data is stored and processed in a trusted environment. The most popular solution of this kind is Intel SGX. Intel’s dominant share of this market is an attack vector of its own. This is one of the reasons why trusted hardware in general is not a popular option in the cryptocurrency community. Security guarantees provided by cryptographic techniques such as multi-party computation (MPC) and homomorphic encryption offer a sounder foundation for privacy. The trade-off, however, is that these techniques are usually slow and costly. One of the pioneers experimenting with them is Enigma. In the future we will likely witness the proliferation of MPC, as well as partially and fully homomorphic encryption schemes, in the realm of open blockchains. Overall, there have been plenty of improvement proposals for privacy and fungibility on open blockchains. Some were initially proposed as extensions to the Bitcoin protocol and eventually evolved into separate coins, which are discussed in the next chapter.
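
Confidential transactions hide amounts using Pedersen commitments, which are additively homomorphic. Multiplying two commitments gives a commitment to the sum of their values. This lets anyone check that inputs equal outputs without seeing any amount. The Python sketch below shows that property in a deliberately tiny multiplicative group modulo the safe prime 1019. The parameters `P`, `Q`, `G`, and `H` are chosen here for illustration only and are far too small to be secure. Real confidential transactions use elliptic-curve groups and also require range proofs to rule out negative amounts, which this sketch omits.

```python
import secrets

# Toy parameters (insecure): P = 2*Q + 1 with Q prime. G and H are squares mod P,
# so both lie in the subgroup of order Q. A real scheme must also ensure nobody
# knows log_G(H); in a group this small it could be brute-forced.
P, Q = 1019, 509
G, H = 4, 9


def commit(value: int, blinding: int) -> int:
    """Pedersen commitment C = G^value * H^blinding (mod P)."""
    return pow(G, value % Q, P) * pow(H, blinding % Q, P) % P


def random_blinding() -> int:
    return secrets.randbelow(Q)


# A transaction spends a hidden input of 50 into hidden outputs of 30 and 20.
r1, r2 = random_blinding(), random_blinding()
c_out1, c_out2 = commit(30, r1), commit(20, r2)
c_in = commit(50, (r1 + r2) % Q)   # input blinding chosen so the blindings balance

# A verifier sees only commitments, yet can confirm that inputs equal outputs.
print(c_in == c_out1 * c_out2 % P)
```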